WEF: "The global outlook is increasingly fractured"

This year's Annual Risks Report from the World Economic Forum highlights growing global challenges, but concludes technological risks are still under the radar for many

This week some of the world’s most prominent leaders, influencers, and thought-leaders will be gathering in the Swiss town of Davos for the Annual Meeting of the World Economic Forum. And one of the topics on the agenda will be the just-released WEF Global Risks Report, now in its 25th year.

I’ve been following and occasionally contributing to the report through WEF’s survey of experts for almost as long as it’s been around. And despite its flaws, I’ve always found it to be an essential resource for understanding the emerging global risk landscape around everything from planetary health and geopolitical stability, to human flourishing.

And over the years I’ve been especially interested in how technological risks are framed and ranked.

Last year I wrote two long articles about the history of technology risks in the annual report over its lifetime, and the positioning of technology-based risks at the start of 2024. I’d highly recommend reading both of these as they provide important context for this year’s report.

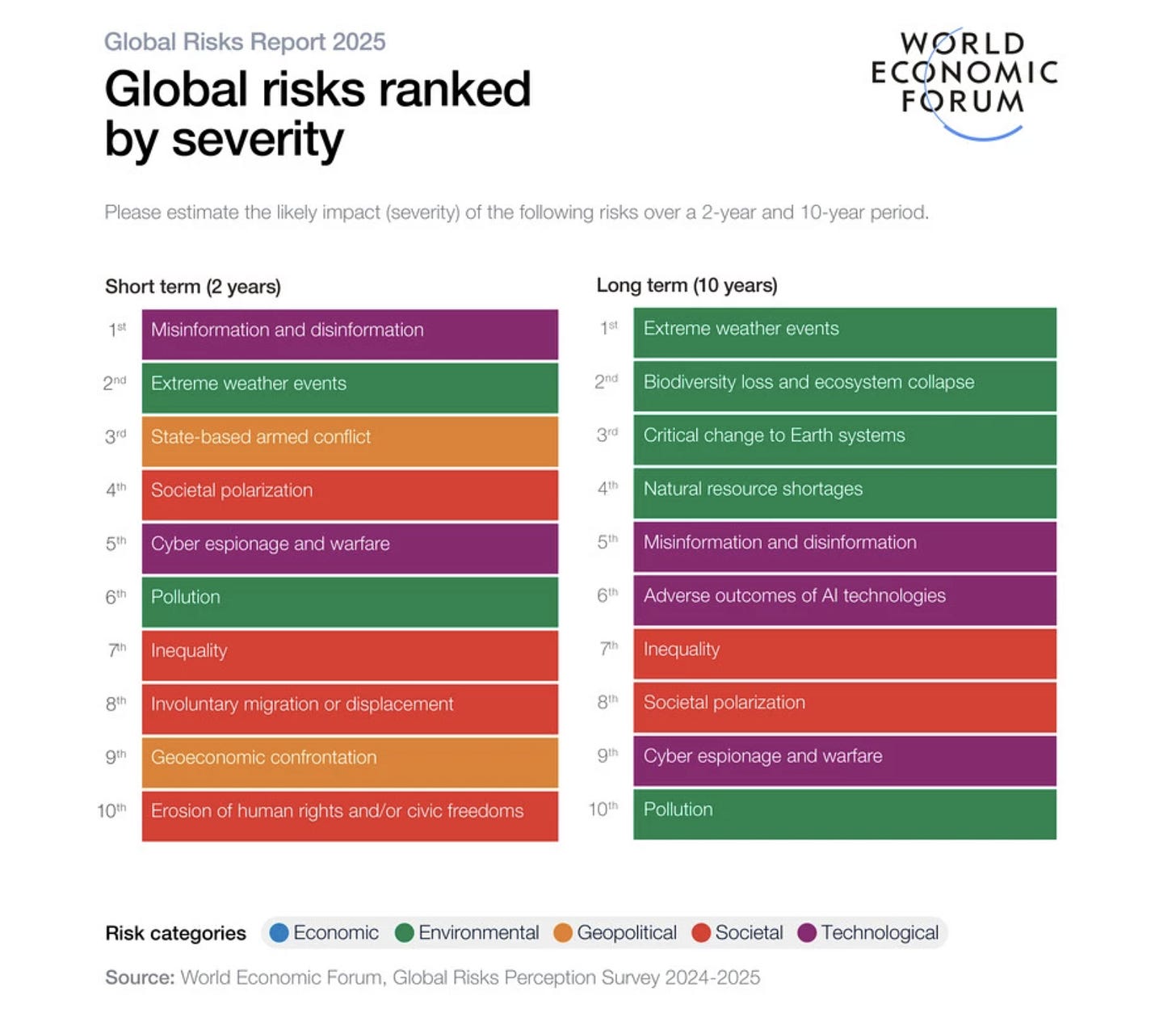

But to this year’s report. Perhaps surprisingly, given all the attention AI has been getting, the 2025 report does not rank the emerging risks of AI that high.

As the report’s authors note, “In a year that has seen considerable experimentation by companies and individuals in making the best use of AI tools, concerns about Adverse outcomes of AI technologies are low in the risk ranking on a two-year outlook.”

Critics of over-hyped AI risks will, I suspect, take this as a signal that saner minds are seeing through the speculation. But I think it’s more likely that most of the experts polled for the report simply do not grasp how disruptive the technology may turn out to be, and how fast it’s moving.

This is hinted at in the report as it notes that “complacency around the risks of such technologies should be avoided given the fast-paced nature of change in the field of AI and its increasing ubiquity.”

It goes on to acknowledge that the 10-year outlook for AI technologies-related risks “is one of the risks that climbs the most in the 10-year risk ranking compared to the two-year risk ranking”.

In this year’s report, adverse outcomes of AI technologies is ranked 32nd over the next 2 years, and 6th over the next 10 years (the full list is at the bottom of this article).

In comparison, in 2024 it was 29th in the 2-year outlook, but still at 6th in the 10-year outlook.

It is worth pointing out, however, that the risks of misinformation and disinformation, which are increasingly being driven by AI, rank 4th in the top ten risks. This suggests that while AI is an enabling technology, it’s the specific risks it contributes to that are grabbing attention.

The same is likely true for the risks of “adverse outcomes of frontier technologies”. These cover “Intended or unintended negative consequences of advances in frontier technologies on individuals, businesses, ecosystems and/or economies. Includes, but is not limited to: brain-computer interfaces, biotechnology, geo-engineering and quantum computing” and, like AI, rank low.

AI was split out of this category last year, which goes some way to explaining its low ranking: this year, adverse outcomes from this cluster of advanced technologies were ranked 33rd out of 33 risks over the next 2 years (34th out of 34 last year), and 23rd over a 10-year outlook (24th last year).

One of the flaws of the annual risk report is that the opinions it represents tend to regress to the mean in terms of understanding and perception. This isn’t necessarily bad — it helps avoid a dangerously high focus on speculative risks at the expense of more immediate and plausible concerns. But it does have a tendency to devalue risks that are poorly understood by a broad base of mainstream experts.

I would put frontier technologies and advanced AI in this category. When the disruptions come, many of them will probably be “I told you so” moments for people immersed in the various fields, but will blindside many other professionals and experts, including contributors to the WEF annual global risks survey that feeds into the report.

That said, the report does highlight a deeply complex and interconnected risk landscape that anyone (or any institution) at the forefront of grappling with how to ensure a positive collective future should pay close attention to. Because, despite its limitations, it does paint a picture of a coming future that will require deeply coordinated global action at unprecedented levels, including on AI and other frontier technologies.