Navigating “orphan risks” has never been more important for tech companies — especially around AI

Technology companies need to get smarter in how they approach social, ethical and governance risks that have a habit of getting in the way of good ideas

In 2018 I published an article on “orphan risks” and tech startups. It drew on the work we were doing at Arizona State University that led to the launch of the Risk Innovation Nexus, and it fleshed out our approach to risks that are often ignored because they’re hard to quantify, yet have a habit of stymying tech companies that are technologically creative but socially naive.

Back then we weren’t focusing explicitly on artificial intelligence. But over the past year it’s become increasingly clear that there’s a growing need for AI researchers, developers and policy makers to familiarize themselves with the concept of orphan risks, and how to approach navigating them.

This was brought home to me yesterday while reading a tweet from Ross Taylor on Meta’s failed Galactica foundation model.

Ross was the first author on the Galactica paper and, as he describes it, the project was a classic case of a groundbreaking idea with substantial potential that was scuppered by a lack of awareness of a deeply complex risk landscape.

Given a growing need for new thinking on risk in the context of advanced technology transitions — and especially AI — I thought it worth reposting that 2018 article. This is pretty much as it was published back then, with a couple of editorial tweaks to bring it up to date:

Orphan Risks

In 2002, Donald Rumsfeld famously sliced and diced the world of uncertainty into three chunks: the known knowns, the known unknowns, and the unknown unknowns.

Rumsfeld was talking about the lack of evidence for weapons of mass destruction in Iraq, but his three types of uncertainty have since been widely used to characterize different types of risk, from those we have a pretty good handle on to those we don’t even know exist yet. What these divisions fail to highlight, though, is a category of risks that are “known knowns” if you’re looking in the right place, but aren’t taken as seriously as they should be, and as a result are threatening to trip up a growing number of enterprises, including startups.

These are so-called “orphan risks”: risks that are perceived as too ill-defined, too complex, or too irrelevant to be worth paying attention to, yet have the power to derail entire enterprises down the line if they are ignored.

The concept of orphan risks is a new one, although the underlying reality is not. Anyone who’s set out to establish a new company knows that the path between good ideas and long-term success is paved with unexpected hurdles and hidden pitfalls. Yet beyond the rather conventional risk-mantras of “don’t harm anyone (if possible)”, “don’t mess the environment up (if you can help it)”, “don’t let your investors down (especially the powerful ones)”, and “don’t break the law (or at the least, don’t get caught)”, startups and their investors are remarkably poor at planning for less-obvious risks, especially when they involve the often-complex dynamic between emerging technologies and society.

This is where misjudging or simply being blissfully unaware of orphan risks can deeply undermine a startup’s long-term success. These include the often-orphaned risks associated with ethically ambivalent behavior, or acting in ways that threaten deeply held values, or embracing emerging technologies that are perceived to come with highly unusual and uncertain dangers.

In many cases, these are socially oriented risks that don’t lend themselves to easy quantification and straightforward risk management tools. Often, the lack of clarity and certainty associated with social risks, and the sheer messiness that many of them represent, lead to them being overlooked. Yet these risks are playing a growing role in underpinning success, as companies like Uber and Facebook are finding out to their cost. Underlining this, Kara Swisher recently mused in The New York Times: “I think we can all agree that Silicon Valley needs more adult supervision right about now.”

The growing gap between new technologies and old risk tools

A large part of the challenge around orphan risks is the growing chasm between tried and tested ways of thinking about risk and the types of risks that are increasingly likely to trip companies up. Powerful new technologies like artificial intelligence, gene editing and advanced manufacturing are rewriting the rule book on what is possible. And when combined with an increasingly interconnected global society, they are transforming the risk landscape that lies between new ideas and their successful implementation. This is a landscape in which conventional risk thinking, and the tools that go along with it, can only get businesses so far.

It’s this gap between emergent risks and conventional risk management approaches that is behind the idea of “risk innovation”. Simply put, this is the understanding that, in order to thrive in a world driven by innovation and the changes it brings, we need parallel innovation in how we think about and act on risk.

This deceptively simple idea is the basis for the Arizona State University Risk Innovation Nexus, which I direct. At the Nexus, we are exploring new ways of understanding the risk landscape around the development and use of new technologies, and of successfully navigating it.

And one of these new ways of understanding is reframing risk as a “threat to value”, or more specifically, a threat to something of importance to an individual, a community, or a business organization.

Risk as a Threat to Value

Approaching risk as a threat to value is, admittedly, a somewhat subjective way of thinking about risk. Yet it has the advantage of opening up conversations around factors that are often highly relevant to the decisions people make yet, because they are hard to quantify, are frequently ignored. These include risks such as threats to autonomy, dignity, trustworthiness, self-esteem, way of life, and even deeply held beliefs and convictions.

This way of thinking about risk extends conventional thinking rather than replacing it — health, wealth and the environment all fit comfortably into the “value” bucket. But it also allows organizations to begin thinking about the impacts of less tangible risks on their business, including the reciprocal dangers of threatening what is important to others through what they do.

Unfortunately, such “things of importance” are often relegated to the “orphan” category. This is a mistake, though. Quite apart from the ethical inappropriateness of ignoring actions that could present deeply impactful social risks to others, these orphan risks are precisely the types of risks that can end up blindsiding companies if they haven’t invested up front in addressing them. They range from cultures and practices that lead to discrimination and social exclusion, to products that threaten social equity and livelihoods, to actions and attitudes that end up undermining public trust and goodwill.

Few of these orphan risks are overtly governed by laws and regulations. Yet many of them elevate the chances of failure if they are not attended to.

New tools for navigating the orphan risk landscape

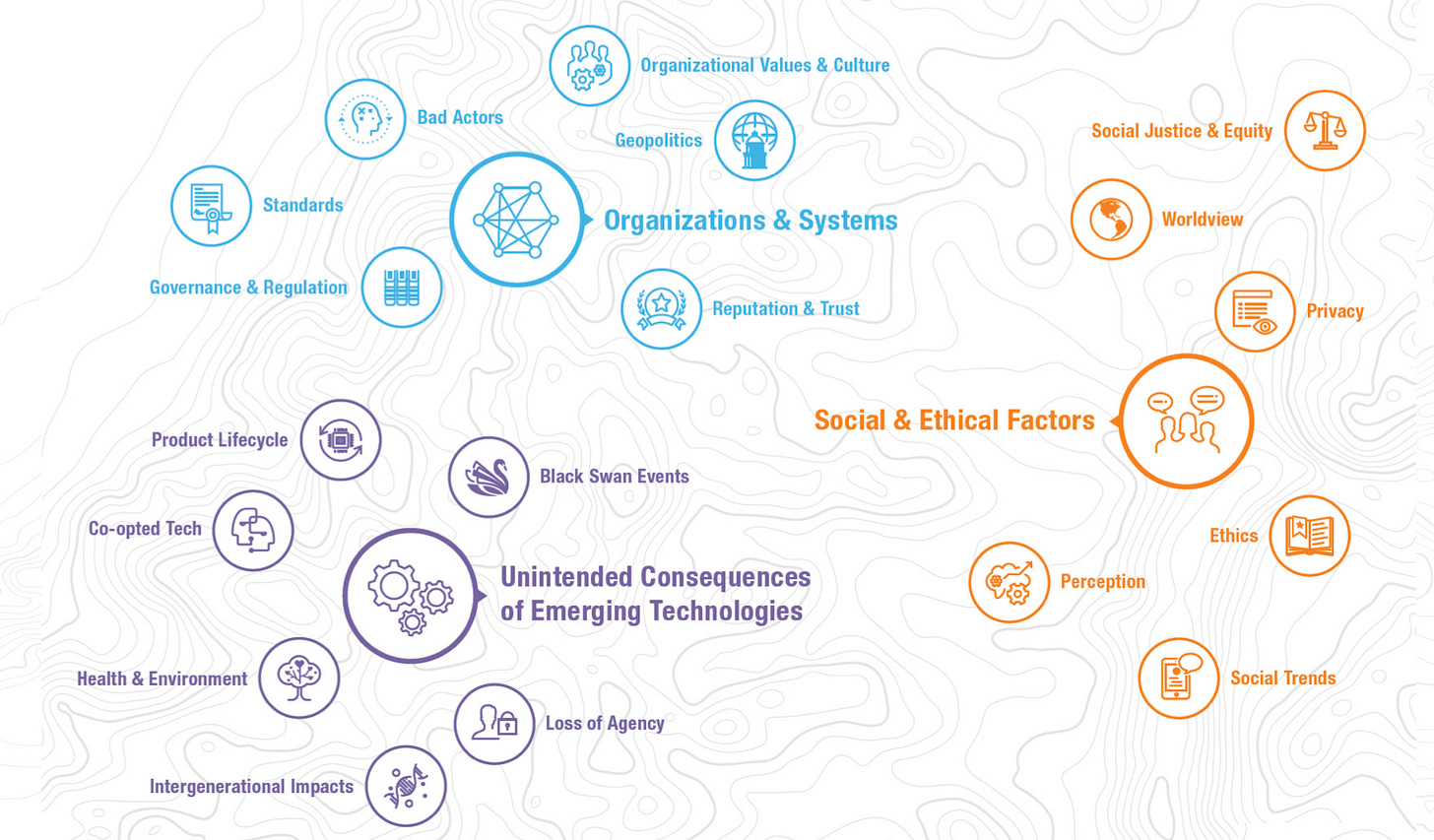

Here, the ASU Risk Innovation Nexus is working on ways to help organizations identify and navigate orphan risks, especially those associated with organizational values and culture, the development and use of emerging technologies, and broader societal impacts. We’re building tools designed to help startups and their investors reduce the chances of being blindsided by risks where a little up-front care and attention can make the difference between success and failure.

Of course, plenty of startups will continue to keep their orphan risks out in the cold, perhaps telling themselves that they’ll think about them when they’re more established, or even that they are not that important. But as Mozilla’s Mitchell Baker recently observed, there’s a growing need for the tech sector to rethink how it does things, lest it produce a new generation of technologists with blind spots that will, in her words, “come back to bite us.”

The question is, how many startups and funders are going to be smart enough to increase their chances of success by taking their orphan risks seriously?

Footnotes

For a full explanation of orphan risks, along with a set of tools and training materials on applying a risk innovation mindset to the development and use of emerging technologies, it’s worth spending some time on the Risk Innovation Nexus website. You can also find a summary of our work here: Why Risk Innovation is critical to the futures we aspire to.

For an example of how the risk innovation approach can be applied to emerging technologies (in this case, brain-machine interfaces), it’s worth checking out the paper “The Ethical and Responsible Development and Application of Advanced Brain Machine Interfaces”.

If you’re interested more specifically in how the risk innovation approach — and the concept of orphan risks — applies to AI, this National Academies-sponsored presentation and the accompanying slide deck is a good place to start: Thinking differently about AI and risk.

And finally, the underlying concepts behind risk innovation are laid out in this commentary in the journal Nature Nanotechnology on why we need risk innovation.