Elon Musk's Neuralink plays "mind games" in more ways than one

Neuralink has released a video of its first patient successfully using an implanted brain computer interface. It's impressive, but there's still a steep hill to climb if the tech is to be successful

It was only a few weeks ago that I was writing about the first patient to receive an embedded wireless brain computer interface as part of Neuralink’s PRIME study. This afternoon, the company released a new video on the platform X of that patient doing well and controlling a computer with their thoughts:

The video shows Neuralink’s first patient deftly playing chess on a computer screen, talking about playing Civilization 6 at all hours (something that was impossible before), and extolling the virtues of the implant — all through thinking.

This isn’t the first time someone has been shown controlling devices with their mind using a brain computer interface, or BCI. In fact, in research circles, scientists and medical professionals have been able to achieve this for some years using interfaces like the Utah Array.

So what makes today’s demonstration different?

First off, I have to say that the hype is a large part of it. Elon Musk has been using the term “telepathy” for a while with respect to the Neuralink BCI, and today was no exception. It’s the type of hyperbole most serious medical professionals and research scientists would avoid like the plague, but not Musk.

This underscores quite a marked difference between what Neuralink is doing and much of the medical research and advancement that has gone before. As a young and ambitious tech company, Neuralink follows a different playbook to many research labs and more mature companies. Its North Star is the “fail fast, fail forward while changing the world at lightning speed” mindset of Silicon Valley. And within this mindset, it’s the reality you can convince people to buy into that matters as much as the tech itself.

It’s an approach that’s worked for many non-medical technologies: the sort of “fake it ’til you make it,” reality-distorting perspective that persuaded us that what we needed more than anything were smartphones, tablets, social media, cars that drive themselves and a whole lot more.

I’m not begrudging this approach, as it’s clearly worked in many cases, in part because people are incredibly good at taking innovative ideas and products and finding new ways to use them. But can the same approach work for BCIs?

I think there’s a chance that it might. But there are some very substantial red flags here. Certainly Neuralink has taken existing science and gone a long way toward moving it from the lab to a more commercially viable product. Their robotic implant technology paves the way to mass deployment of the tech; the implants are smaller and have more electrodes than the alternatives. And the Neuralink device has much more of the feel of a consumer product than a research device — the company would have you imagine a future where getting one isn’t that different from having LASIK surgery rather than being admitted to hospital to have an unflattering bundle of wires attached to your skull.

And this is the other thing that makes today’s demo different to much of the other stuff you may read or watch about BCIs — it makes Neuralink’s tech feel accessible and attractive; a suite of high end capabilities wrapped up in cool consumer tech that’s, let’s admit it, sexy.

Of course, this image couldn’t be further from the reality. But the reality distortion field is real, and I suspect that critics will discount it at their peril.

And this approach may even work. The Silicon Valley mindset could succeed in accelerating this technology where slow and steady medical research has not.

But this path to a BCI future is, I suspect, still paved with some rather gnarly pitfalls.

Back in 2019 I published a paper on the ethical and responsible development and application of advanced brain machine interfaces with my colleague Marissa Scragg. It’s a paper that was requested in direct response to the initial Neuralink paper.

As part of this, we speculated on areas of value associated with Neuralink and the company’s key stakeholders, and the most likely “orphan risks” that lay between the present and the company’s success.

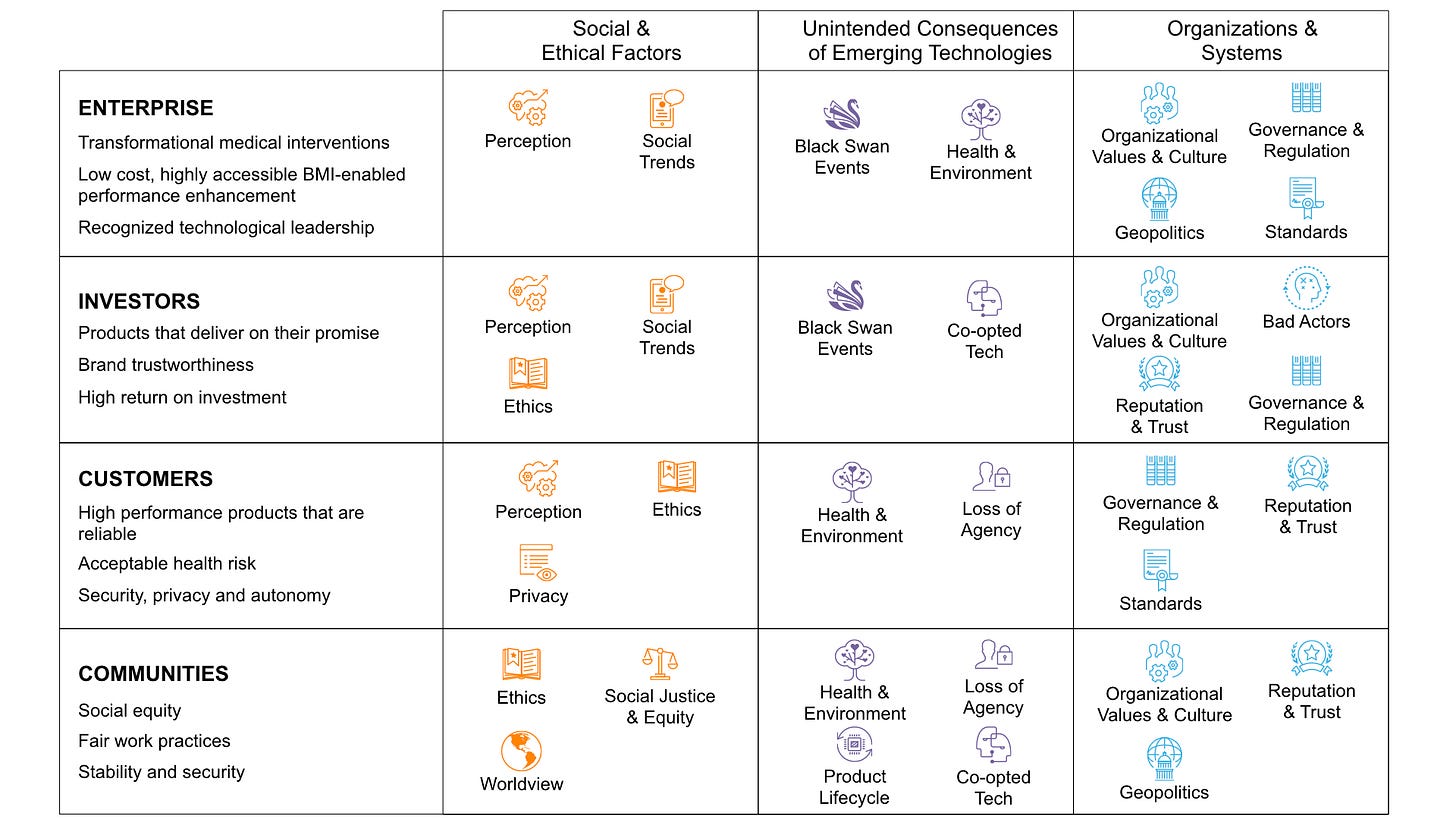

These were summarized in the figure below — you can get some of the background to the analysis from the paper or on the Risk Innovation Nexus website (which described this approach), but in essence the figure maps out easy-to-overlook risks that Neuralink should probably be looking out for.

As we wrote back then:

“Figure 1 provides an example of what this metaphorical “risk landscape” might look like for the brain machine interface, as described by Musk et al. The approach is designed to provide a snapshot of areas that warrant further attention and to illuminate potential risk clusters that may otherwise remain obscured. It is not inclusive or comprehensive and does not include many conventional risks for which there are established risk assessment and mitigation frameworks, for example, cyber security. Rather, it provides a starting point for mapping out orphan risks that have the potential to directly or indirectly affect development and that could raise substantial issues around ethical and socially responsible innovation …

“Within this context, the mapping presented in Figure 1 can be interpreted in a number of ways. First, it provides insights into orphan risks that are worth the enterprise being aware of. In the case of advanced brain machine interfaces, how investors, users, and others perceive the potential workings and impacts of the technology are flagged as important, as are social trends that may either create opportunities for development or potential barriers—for instance, if there is a public backlash against brain machine interface-based augmentation. Perhaps, not surprisingly, for an invasive neural read-write technology, novel health impacts are flagged, as are fundamentally unpredictable “black swan” events. In this case, the mapping suggests a high level of attention should be paid to enterprise and technology agility with respect to navigating around unexpected hurdles …

“Reading across the map, there is a greater density of orphan risks identified under “Organizations and Systems,” suggesting that some of the greatest potential threats to successful advanced brain machine interface technologies lie within the enterprise and the formal organizations governing how it operates. These include the degree to which organizational values and culture align with how the technology is developed and used, evolving regulations that may play an outsized influence on development pathways, and national and international standards that guide and limit technology performance and use.

“Second, the risk landscape in Figure 1 provides insights into potential threats to value within key constituencies, which may, in turn, lead to barriers to success. Here, orphan risks that do not directly impact the enterprise, but are likely to influence its success, are highlighted. These include the ethics surrounding how the technology is developed and used, how it potentially leads to social injustice, and whether it raises privacy concerns. This broader perspective on orphan risks also flags possible issues such as how trustworthy the enterprise is perceived to be and the dangers of “bad actors” giving the technology a negative reputation that risks poisoning the market.

“A third way the landscape in Figure 1 can be read is by identifying risks that cluster and converge in ways that increase the chances of truly blindsiding impacts. Certain risks dominate the landscape, including those associated with ethics, health, governance, and regulation. If not planned for, these could build upon each other to create insurmountable obstacles to value creation. Instead of planning for just one risk at a time, analysis and understanding of these clusters have [sic] the potential to provide insights into where resources are best focused to protect stakeholder value. Here, the analysis suggests that some of the more substantial near-time challenges to ensuring successful, responsible, and ethical advanced brain machine interfaces are associated with organizational systems and operations. These include internal processes such as the development and application of guiding principles and expectations of ethical and responsible behavior. However, they also extend to professional bodies, regulatory agencies, and communities of practice that form the broader ecosystem within which advanced brain machine interfaces are conceived, developed, and used. Here, Figure 1 also highlights orphan risks that occur multiple times such as ethics, perception, health and environmental impacts, and reputation and trust. These (and similar risk clusters) provide insights into where particular attention is most likely needed to support successful and responsible development.”

Looking back, this “risk landscape” still stands up reasonably well. Neuralink has avoided some of the potential early pitfalls by going slower than it might have, focusing on medical applications first, and working with regulators. As a result, many near-term pitfalls have been avoided or pushed to the future.

However, I suspect that the next couple of years are when the risks associated with a tech company with go-faster stripes working on BCIs will really kick in. And one area that I must confess I worry about a lot is long-term patient autonomy and care.

One of the first times I wrote about Neuralink’s tech was in the book Films from the Future: The Technology and Morality of Sci-Fi Movies back in 2018. Part of the relevant chapter looked at the thorny issue of who “owns” you if your health — your life even — depends on technology that a company has implanted and has control over.

For patients receiving Neuralink BCIs, their health and wellbeing are intertwined with the company from the moment they receive that implant. If there are bugs or vulnerabilities in the tech, if updates are needed, if the wireless interface goes wonky or the app glitches, or if Neuralink decides that patients need to sign up for a paid subscription service to receive continuing support, the patient is beholden to the company.

This is already the case with other medical implants, and it’s a growing challenge. But what happens when the company providing the implants comes from a sector where monetizing the customer is the name of the game?

And worse, what happens if the company goes bust? What happens to the patients if they are left with a legacy tech in their heads with no hope for continuing service and support?

These are questions that are relevant to any medical company providing embedded or implanted tech. But they are especially relevant to a go-faster tech company that’s messing with people’s heads.

Again, it may be that things work out just fine — and I must confess that I’m excited by what Neuralink is achieving. But the company is only just setting out on a long road full of pitfalls. And it’s one where trips and falls could have a profound impact on the very people the company’s trying to help.

I'd add generating real-world evidence, conducting clinical trials, and pushing into healthcare markets with established medical device competitors as further hurdles. The FAA might allow rockets to blow up over the sea, but the FDA takes a very dim view of patients being harmed in their seats.

If Elon approaches this advancement with the same rigor as SpaceX, then even if there are issues, he's likely to use them to drive major improvements.