Considering ethics now before radically new brain technologies get away from us

Imagine infusing thousands of wireless devices into your brain, and using them to both monitor its activity and directly influence its actions. It sounds like the stuff of science fiction, and for the moment it still is — but possibly not for long.

Brain research is on a roll at the moment. And as it converges with advances in science and technology more broadly, it’s transforming what we are likely to be able to achieve in the near future.

Spurring the field on is the promise of more effective treatments for debilitating neurological and psychological disorders such as epilepsy, Parkinson’s disease and depression. But new brain technologies will increasingly have the potential to alter how someone thinks, feels, behaves and even perceives themselves and others around them — and not necessarily in ways that are within their control or with their consent.

This is where things begin to get ethically uncomfortable.

Because of concerns like these, the U.S. National Academies of Sciences, Engineering and Medicine (NAS) are cohosting a meeting of experts this week on responsible innovation in brain science.

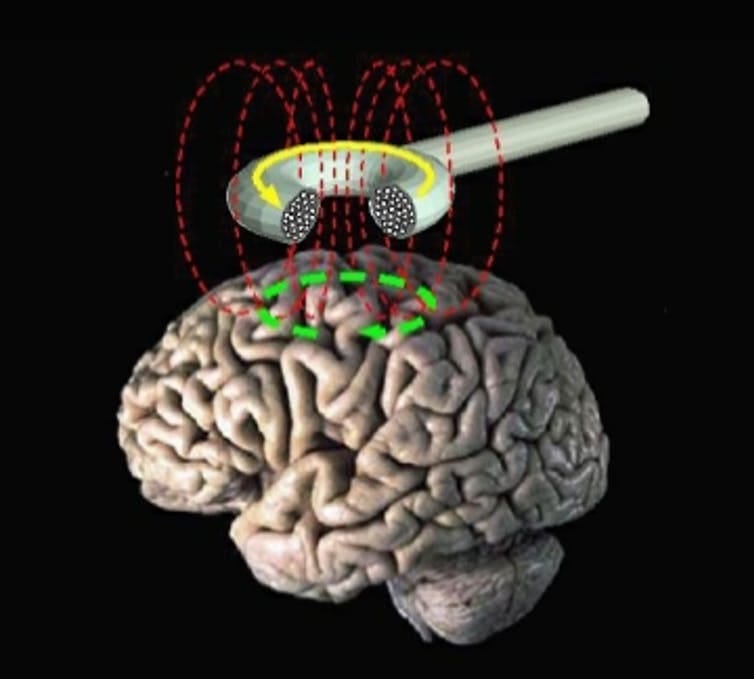

Berkeley’s ‘neural dust’ sensors are one of the latest neurotech advances.

Where are neurotechnologies now?

Brain research is intimately entwined with advances in the “neurotechnologies” that not only help us study the brain’s inner workings, but also transform the ways we can interact with and influence it.

For example, researchers at the University of California, Berkeley recently published the first in-animal trials of what they called “neural dust”: implanted millimeter-sized sensors. They inserted the sensors in the nerves and muscles of rats, showing that these miniature, wirelessly powered and connected sensors can monitor neural activity. The long-term aim, though, is to introduce thousands of neural dust particles into human brains.

The UC Berkeley sensors are still relatively large, about the size of a coarse grain of sand, and just report on what’s happening around them. Yet advances in nanoscale fabrication are likely to enable their further miniaturization. (The researchers estimate they could be made thinner than a human hair.) And in the future, combining them with technologies like optogenetics, which uses light to stimulate genetically modified neurons, could enable wireless, localized brain interrogation and control.

Used in this way, future generations of neural dust could transform how chronic neurological disorders are managed. They could also enable hardwired brain-computer interfaces (the original motivation behind this research), or even be used to enhance cognitive ability and modify behavior.

In 2013, President Obama launched the multi-year, multi-million dollar U.S. BRAIN Initiative (Brain Research through Advancing Innovative Neurotechnologies). The same year, the European Commission launched the Human Brain Project, focusing on advancing brain research, cognitive neuroscience and brain-inspired computing. There are also active brain research initiatives in China, Japan, Korea, Latin America, Israel, Switzerland, Canada and even Cuba.

Together, these represent an emerging and globally coordinated effort to not only better understand how the brain works, but to find new ways of controlling and enhancing it (in particular in disease treatment and prevention); to interface with it; and to build computers and other artificial systems that are inspired by it.

Cutting-edge tech comes with ethical questions

This week’s NAS workshop — organized by the Organization for Economic Cooperation and Development and supported by the National Science Foundation and my home institution of Arizona State University — isn’t the first gathering of experts to discuss the ethics of brain technologies. In fact there’s already an active international community of experts addressing “neuroethics.”

Many of these scientific initiatives do have a prominent ethics component. The U.S. BRAIN Initiative, for example, includes a Neuroethics Workgroup, while the E.C. Human Brain Project is using an Ethics Map to guide research and development. These and others are grappling with the formidable challenges of developing future neurotechnologies responsibly.

It’s against this backdrop that the NAS workshop sets out to better understand the social and ethical opportunities and challenges emerging from global brain research and neurotechnologies. One goal is to identify how to ensure these technologies are developed in ways that respond to social needs, desires and concerns. And it comes at a time when brain research is beginning to open up radical new possibilities that were far beyond our grasp just a few years ago.

In 2010, for instance, researchers at MIT demonstrated that Transcranial Magnetic Stimulation, or TMS — a noninvasive neurotechnology — could temporarily alter someone’s moral judgment. Another noninvasive technique called transcranial Direct Current Stimulation (tDCS) delivers low-level electrical currents to the brain via electrodes on the scalp; it’s being explored as a treatment for clinical conditions from depression to chronic pain — while already being used in consumer products and by do-it-yourselfers to allegedly self-induce changes in mental state and ability.

Crude as current TMS and tDCS capabilities are, they are forcing people to think about the responsible development and use of technologies that could change behavior, personality and thinking ability at the flick of a switch. And the ethical questions they raise are far from straightforward.

For instance, should students be allowed to take exams while using tDCS? Should teachers be able to use tDCS in the classroom? Should TMS be used to prevent a soldier’s moral judgment from interfering with military operations?

These and similar questions grapple with what is already possible. Complex as they are, they pale against the challenges emerging neurotechnologies are likely to raise.

Preparing now for what’s to come

As research leads to an increasingly sophisticated and fine-grained understanding of how our brains function, related neurotechnologies are likely to become equally sophisticated. As they do, our ability to precisely control brain function, thinking, behavior and personality will extend far beyond what is currently possible.

To get a sense of the emerging ethical and social challenges such capabilities potentially raise, consider this speculative near-future scenario:

Imagine that in a few years’ time, the UC Berkeley neural dust has been successfully miniaturized and combined with optogenetics, allowing thousands of micrometer-sized devices, each capable of monitoring and influencing localized brain functions, to be seeded through someone’s brain. Now imagine this network of neural transceivers is wirelessly connected to an external computer, and from there, to the internet.

Such a network — a crude foreshadowing of science fiction author Iain M. Banks’ “neural lace” (a concept that has already grabbed the attention of Elon Musk) — would revolutionize the detection and treatment of neurological conditions, potentially improving quality of life for millions of people. It would enable external devices to be controlled through thought, effectively integrating networked brains into the Internet of Things. It could help overcome restricted physical abilities for some people. And it would potentially provide unprecedented levels of cognitive enhancement, by allowing people to interface directly with cloud-based artificial intelligence and other online systems.

Think Apple’s Siri or Amazon’s Echo hardwired into your brain, and you begin to get the idea.

Yet this neurotech — which is almost within reach of current technological capabilities — would not be risk-free. These risks could be social — a growing socioeconomic divide perhaps between those who are neuro-enhanced and those who are not. Or they could be related to privacy and autonomy — maybe the ability of employers and law enforcement to monitor, and even alter, thoughts and feelings. The innovation might threaten personal well-being and societal cohesion through (hypothetical) cyber substance abuse, where direct-to-brain code replaces psychoactive substances. It could make users highly vulnerable to neurological cyberattacks.

Of course, predicting and responding to possible future risks is fraught with difficulties, and depends as much on who considers what a risk (and to whom) as it does on the capabilities of emerging technologies to do harm. Yet it’s hard to ignore the disruptive potential of near-future neurotechnologies. Hence the urgent need to address, as a society, what we want the future of brain technologies to look like.

Moving forward, the ethical and responsible development of emerging brain technologies will require new thinking about, and considerable investment in, what might go wrong and how to avoid it. Here, we can learn from the thinking on responsible and ethical innovation that has emerged around recombinant DNA, nanotechnology, geoengineering and other cutting-edge areas of science and technology.

To develop future brain technologies both successfully and responsibly, we need to do so in ways that avoid potential pitfalls while not stifling innovation. We need approaches that ensure ordinary people can easily find out how these technologies might affect their lives, and that give them a say in how they’re used.

All this won’t necessarily be easy — responsible innovation rarely is. But through initiatives like this week’s NAS workshop and others, we have the opportunity to develop brain technologies that are profoundly beneficial, without getting caught up in an ethical minefield.

Originally published at theconversation.com on March 31, 2016.