Can AI influence how you feel about someone without you knowing?

A new study suggests that it can

Imagine that you’re talking with someone over Zoom and, as the conversation goes on, you begin to feel that they really get you — to the extent that a strong bond of trust quickly forms.

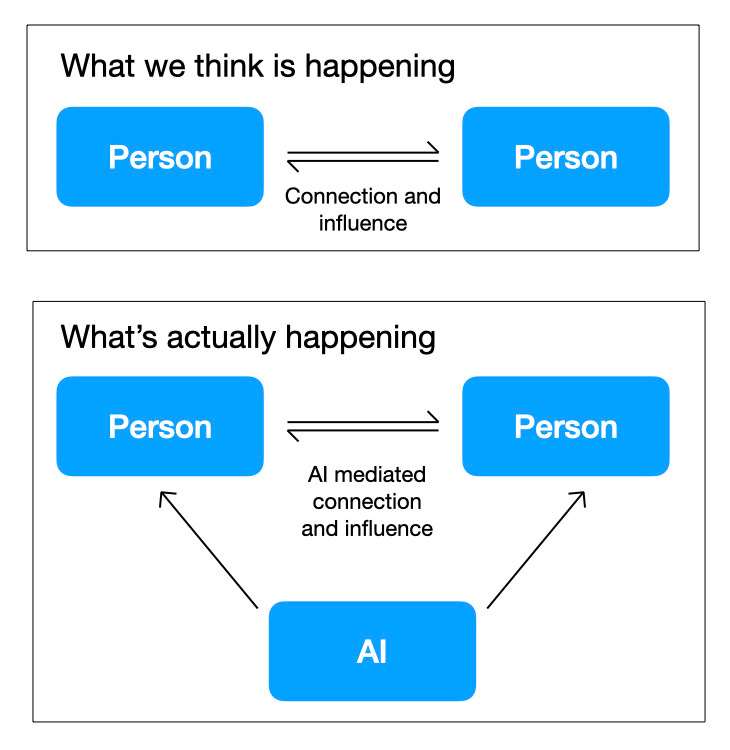

Now imagine that this sense of trust is the result of an AI that, unknown to either of you, is manipulating facial features in real time to influence how each of you feels about the other.

Disturbing as this seems, the possibility of using artificial intelligence to manipulate social connections in this way was demonstrated in a recent paper in the Proceedings of the National Academy of Sciences (also available for free here).

As I noted in last week’s update to Future of Being Human subscribers, it’s a study that made me feel deeply uneasy, and one that raises serious questions around human agency in a world where AI can mediate how we feel about, and are potentially influenced by, others through digital media.

Interestingly, the research by Pablo Arias-Sarah and colleagues didn’t set out to study AI manipulation. Rather, they wanted to better understand how social signals like smiles influence social interactions, something that’s still poorly understood.

Given the challenges of studying social interactions by manipulating people in real life without them knowing — a near-impossible task — the paper’s authors turned to AI. By using artificial intelligence to covertly alter social signals in real time on a custom-made video dating platform, they were able to tease out how participant smiles were directly associated with outcomes such as feelings of attraction.


In the experiment, thirty-one heterosexual volunteers participated in four 4-minute rounds of video-based male-female speed dating.[1] All of them were single and were willing to participate in an experiment where they would have the option to potentially connect with partners afterward.

However, none of them had any idea that this was an experiment where their facial features would be manipulated. And none of them were aware of this happening during their conversations.

Before each video call, participants were shown a photo of their partner and asked to rate the likelihood of the other person wanting to see them again; the likelihood of them wanting to see the other person again; whether they thought the conversation would be pleasant and interesting; and to what extent the person in the photo was smiling.

After each video call, participants were asked the same questions, but this time they were focused on their actual interactions with the other person.

In this way, the researchers were able to isolate the impacts of manipulating smiles on how the volunteers felt about the people they were engaging with.

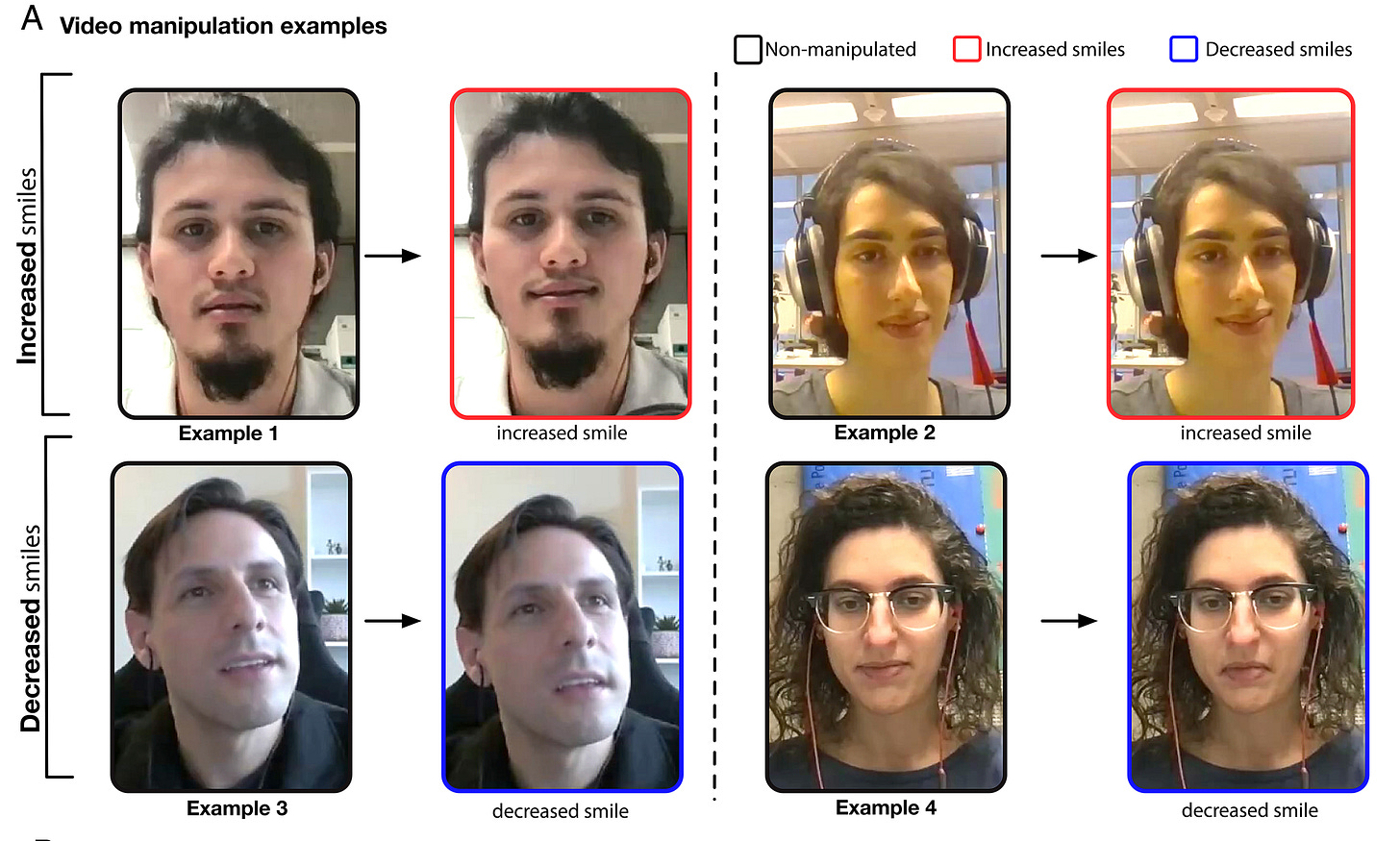

During each call, AI was used to manipulate the smiles of each participant in real time in three ways:

Making both participants appear to each other as if they were smiling at the same time;

Making both participants appear to each other as if they were not smiling at the same time; and

Making it look as if one was smiling and the other not smiling at the same time.
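For readers who think in code, here is a minimal sketch of how these three conditions fall out of two possible filter settings per face. It is an illustration only, not the researchers' implementation: the names `SMILE_LEVELS` and `classify` are assumptions made up for this example, and the sketch assumes each participant's displayed smile is either increased or decreased.

```python
from itertools import product

# Assumption: the filter either increases or decreases each displayed smile.
SMILE_LEVELS = ("increased", "decreased")


def classify(face_a: str, face_b: str) -> str:
    """Map the filter settings on two faces to one of the three conditions."""
    if face_a == face_b == "increased":
        return "both smiling"
    if face_a == face_b == "decreased":
        return "both not smiling"
    return "one smiling, one not"


# Group every combination of filter settings by the condition it produces.
conditions: dict[str, list[tuple[str, str]]] = {}
for face_a, face_b in product(SMILE_LEVELS, repeat=2):
    conditions.setdefault(classify(face_a, face_b), []).append((face_a, face_b))

for name, pairs in conditions.items():
    print(name, pairs)
```

The mismatched condition covers two combinations, since either partner can be the one who appears to smile.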

As you can see from the figure taken from the paper, the manipulations were subtle. But they still led to measurable effects.

Three significant findings stand out from the study in the context of AI manipulation:

First, participants judged the other person to like them more when their partner’s image was manipulated to smile more.

Second, where AI was used to increase the smiles of both participants simultaneously, participants reported feeling most attracted to each other.

And third, the conversations where observed smiles were simultaneously manipulated in the same way were rated as being of the highest quality. Interestingly, this held even when the smiles on both participants’ faces were simultaneously reduced: these conversations were also perceived as being of higher quality.

In other words, by using AI to manipulate the smiles of participants in real time, it was possible to affect how the participants felt about each other and their interactions.

I should note here that the volunteers in the experiment were all primed for romantic connections, and so the findings may not be generalizable without further research.

It’s also important to underline that this all occurred across digital media — and in an environment where covert AI manipulation is possible. And while compelling, the level of manipulation within the experiment was relatively small compared to the wider range of signals that are potentially associated with social influence.

Even so, the implications for how AI may be used to alter how people feel about each other — and what this might mean for building trust and influencing thoughts, beliefs, and behaviors — are substantial.

What this study indicates (and again, it’s worth emphasizing that this was not its primary intent) is that, for digitally mediated interactions such as video and even audio, AI can potentially be used to exert hidden influence.

It’s by no means clear at this point how far such AI-mediated influence could go. Ironically, though, the methodology developed by the paper’s authors could help explore this further.[2]

That said, even the possibility of AI tools being used to manipulate how we feel about others, how much we trust them, how willing we are to believe them, and how open we are to being influenced by them, is concerning.

What’s more concerning is that the ability to do this in relatively crude ways is available now, and the opportunities for doing it in more sophisticated ways are just around the corner.

We’re already seeing apps designed to alter expressions in photos, and as this study shows, the means are available now to make subtle alterations on the fly in video feeds.

As a consequence, we’re facing the possibility of a near future where the body language of the people you’re digitally engaging with, as well as social signals related to voice pitch and modulation, may be manipulated to nudge you toward responding in certain ways, either by those who control the AI tools, or by the AI itself.

This becomes more worrisome still when this ability is combined with multimodal AI platforms that can assess and respond to what participants are thinking and feeling in real time — and use this to steer interactions toward a desired set of outcomes.

The paper’s authors reflect similar concerns in their conclusion, noting that “A discussion about the regulation of transformation filters should take place at a societal level. This discussion should consider that transformation filters can be used by individuals to control how they are perceived by others, how they perceive others or, in the most dystopic scenario—which is the one studied in this article—to covertly control how third party individuals perceive each other.”

This is, if anything, an understatement given the rate at which AI-based technologies are developing. And it raises serious concerns around what responsible and ethical innovation looks like in this space, and the governance approaches that are needed to ensure it stays responsible and ethical.

[1] There were originally 32 participants, but one of them wasn’t able to participate in each speed dating session. A total of 6 interactions between 31 participants were recorded.

[2] The experimental platform that the researchers built to perform these studies online, DuckSoup, is available for free and in open-source format at https://github.com/ducksouplab/ducksoup
