Are educators falling behind the AI curve?

As tech companies release a slew of generative AI updates, there's a growing risk that educational practices and policies are struggling to keep up with new capabilities

It’s a common occurrence: A colleague asks me about students using artificial intelligence in class, and it turns out that their perceptions of what generative AI does and how students are using it are 18 months and a lifetime out of date.

I’m exaggerating, of course, but in a week when OpenAI, Google, and Apple have all made major announcements around increasingly advanced uses of AI, the lag between what educators think is happening and what is actually happening is widening at a frightening rate. And this has implications all the way from the policies and pedagogies used by individual instructors to institutional policies around the uses and misuses of AI.

The past few weeks have seen conflicting messaging around whether the seemingly exponential growth of AI capabilities in recent years is finally slowing down. There’s certainly a sense in some quarters (but by no means all) that advances in the large language models underpinning many generative AI platforms aren’t fueling the transformative leaps we were seeing a year or so ago. And this has led to some cynicism over projected AI futures, including AI’s ability to bring about transformational leaps in education.

Yet this cynicism overlooks a considerable lag between what emerging models are capable of, and how this is being incorporated into real-world applications. And in the field of education, this lag is being amplified by outdated perceptions of both the capabilities of AI and how these are being utilized.

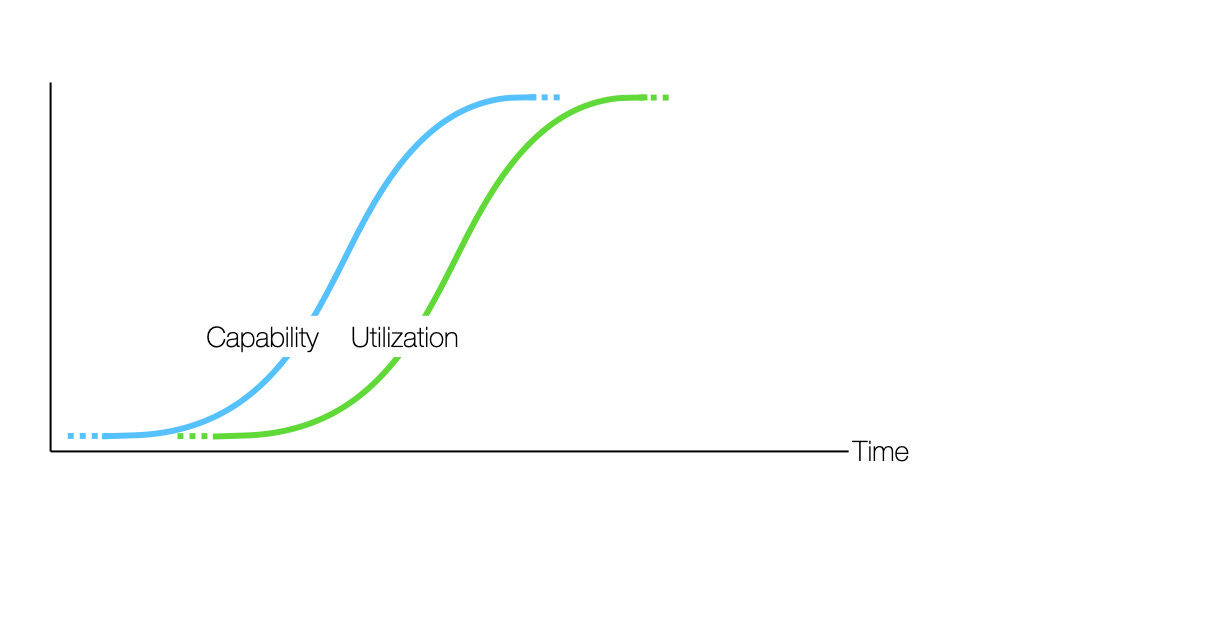

Although I’m sure some will disagree, there are indications that large language models are following a classic tech innovation “s-curve”: a curve that’s characterized by slow initial development followed by near-exponential growth, and finally a tailing off as new capabilities plateau.

It’s a trend that Google’s CEO Sundar Pichai hinted at recently when he remarked “I think the progress is going to get harder. When I look at [2025], the low-hanging fruit is gone”.

But this is only part of the AI innovation picture.

As new technological capabilities emerge, there’s an inevitable lag between the potential of a new technology and how this translates into actual innovation: in other words, products, processes or services that lead to tangible value creation. This leads to a second “utilization” s-curve that reflects the growth in how a new technology is used, and it’s one that lags behind the capability curve.

Where both curves are steep, the gap between what a technology is capable of and what’s actually being done with it can be exceptionally large. And this, it seems, is where we are with AI, and generative AI in particular. We’re at a point where progress at the cutting edge of AI is slowing down, while advances in how AI is being used are speeding up.
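For readers who like to see the shape of an argument in numbers, here is a rough sketch of the two curves. It is only an illustration under assumptions of my own choosing: both curves are modeled as simple logistic functions, the utilization curve is the capability curve shifted later by an arbitrary lag of 3 time units, and the names `s_curve` and `gap` are hypothetical helpers rather than anything measured from real AI data.

```python
import math


def s_curve(t, midpoint, steepness=1.0):
    """Logistic s-curve scaled 0 to 1: slow start, rapid growth, then a plateau."""
    return 1.0 / (1.0 + math.exp(-steepness * (t - midpoint)))


def gap(t, capability_mid=0.0, utilization_mid=3.0, steepness=1.0):
    """Capability minus utilization at time t (utilization lags capability).

    The midpoints and steepness are illustrative assumptions, not fitted values.
    """
    return s_curve(t, capability_mid, steepness) - s_curve(t, utilization_mid, steepness)


# Scan a range of times (in arbitrary units) and find where the gap is widest.
times = [x / 10 for x in range(-100, 131)]
widest = max(times, key=gap)

if __name__ == "__main__":
    # Compare an early point, the point of widest gap, and a late point.
    for t in (-6.0, widest, 9.0):
        print(
            f"t={t:5.1f}  capability={s_curve(t, 0.0):.2f}  "
            f"utilization={s_curve(t, 3.0):.2f}  gap={gap(t):.2f}"
        )
```

In this toy model the gap is small early on and small again once both curves have plateaued. It is widest midway between the two midpoints, where capability is already past its midpoint and starting to flatten while utilization is still accelerating.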

We’ve seen this playing out this past week as companies have rushed to announce how AI breakthroughs are being harnessed. These include new ways that Apple Intelligence is being implemented on iPhones, iPads and Apple computers; Google’s announcement of Gemini 2.0 and platforms that get users closer to true agentic AI; and OpenAI’s “12 days of OpenAI,” where the company is releasing details of new capabilities on a daily basis, including the full version of its reasoning model OpenAI o1, its video generation platform Sora, and Canvas, a new way to work collaboratively with ChatGPT.

None of these announcements reflect large strides at the cutting edge of what the underlying technology is capable of. But they do suggest that we’re entering a phase of rapid AI integration into useable tools.

And what is perhaps most striking here is a growing trend to embed AI in almost everything we use — a trend which marks a significant departure from the early days of text- and image-based generative AI tools.

Back in 2022, the public release of ChatGPT helped form and crystallize mental models of what generative AI is in many people’s minds. It was, according to these mental models, a tool that could respond to well-crafted prompts with sophisticated human-like prose that was seductive, persuasive, and (in the case of students using the platform to complete assignments) deceptive. But it was also a tool that had a tendency to make things up, and had plenty of “tells” that showed it was only superficially human-like, such as an excessive use of the phrase “in conclusion”.

These are mental models that, in my experience, many people still hold. And yet the AI of today — and especially how it’s being implemented and used — is a far cry from the AI of 2022.

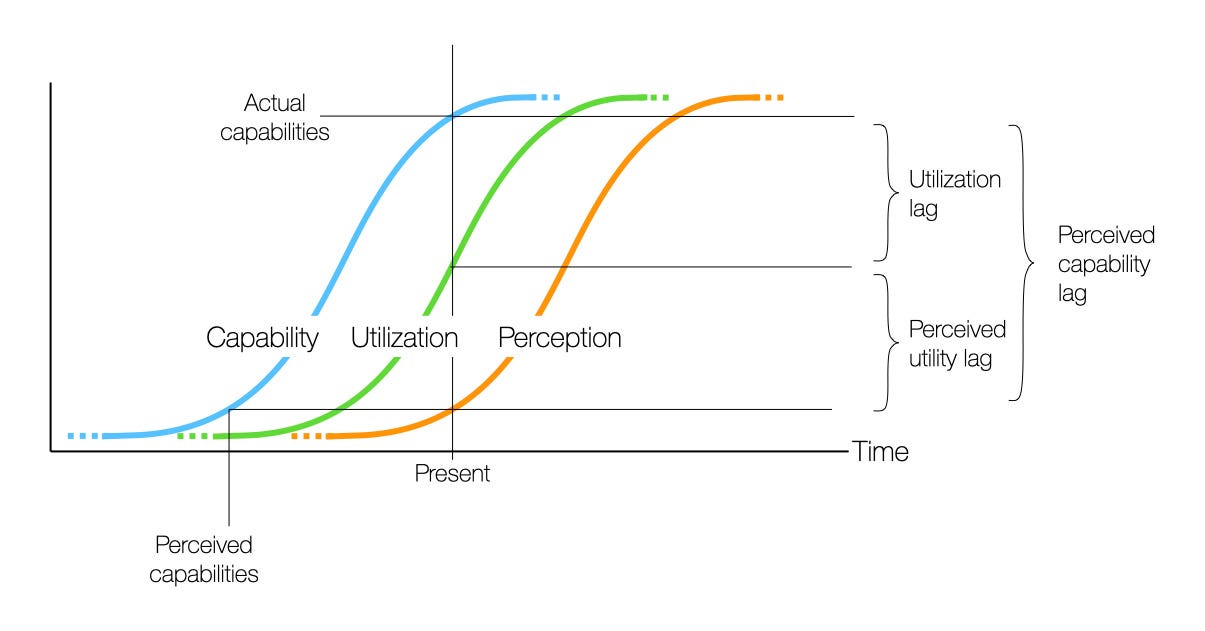

And this brings me to the third s-curve, the perception curve.

While AI capabilities (at least with the current iteration of underlying technologies) are reaching the top of their curve, and utilization is just beginning to take off in a big way, perceptions around what AI is and what it can do are lagging behind both, as exposure to early capabilities still informs many people’s ideas.

This is normal for a new technology. But where the lag is substantial and decisions are being made on assumptions that are out of date, it can create problems. And this is where I worry that educational practices and policies are lagging far behind current AI realities.

This lag is reflected in this week’s slew of AI announcements. While many people I work with still think of generative AI as being an app that you fire up, give a prompt to, and receive a response from, the generative AI of today is much closer to having a smart, engaging, and supremely patient artificial person integrated into everything you do.

AI is no longer something that’s so isolated from everyday life that we have to make a conscious effort to engage with it. Rather, it’s a technology that is increasingly becoming embedded into our lives, whether we’re engaging with it through Alexa, Siri or other AI assistants, verbally chatting with AI personas on our phones, using it to fast-track just-in-time and on-the-go learning through apps like Perplexity, or relying on it when writing everything from emails to essays, or coding, cooking, or even just thinking about and noodling with new ideas.

If, as educators, our mental models are stuck in 2022 and we have no conception of what the AI of the present is like, there’s a danger that we’ll go down a path of implementing policies that simply do not reflect what students need to know, what they already know, and how they’re already using artificial intelligence.

The reality is that the real-world applications of AI today are massively more sophisticated than they were a couple of years ago. They are more accurate and less prone to hallucinations. They are more able to provide complex responses through long conversations. They are capable of emulating reasoning. Low latency and highly realistic voice interfaces are opening up new and transformative use-cases. And they are increasingly being integrated so deeply into apps and products that it’s sometimes hard not to use them (or even to know when you are).

Across all of these advances, students are learning through creative thinking and hands-on experience how to extract value from emerging AI tools and capabilities far faster than most of their instructors are.

This lag between perception and reality is a problem if decisions are being made and policies put in place that don’t reflect current AI capabilities and utilization. It’s a lag that calls into question both what students are being taught about how to thrive in an age of AI, and how they are penalized if they don’t conform to outdated expectations.

Unfortunately, addressing this lag is not straightforward. One approach that some institutions are taking is to incorporate AI literacy into their curricula. It’s an approach that makes a lot of sense, but only if what is taught keeps up with the rapid pace of AI innovation and integration.

This is a challenge when the world is changing faster than new material can typically be incorporated into teaching.

One way around this is to focus on how to think about the development and use of AI within society, rather than the nuts and bolts of what the technology is capable of at any given point. This might include covering foundational ideas around responsible and irresponsible development and use of AI, developing an understanding of the complex intersection between AI and society, being aware of critical failure modes that are likely to persist across multiple generations of AI, and fostering an ability to parse and navigate the shifting limitations and affordances of AI tools.

My sense here is that this will depend on nurturing new ways of thinking about AI that are agile and adaptable. The same goes for policies and processes around AI in education.

For instance, academic integrity policies that are built around how people were using ChatGPT in 2022 are likely to turn out to be highly brittle as students embrace new technologies like NotebookLM, ChatGPT’s voice mode, Apple Intelligence, and a range of emerging agentic capabilities. Similarly, policies that are based on black and white ideas of what AI is, what it does, and how it’s being used, are likely to be out of date before they’re implemented.

The question then is whether practices, processes, and policies can be developed that are agile, that focus on appropriate behavior that’s independent of a particular AI technology, and that help support a mindset that’s grounded in responsible as well as beneficial use.

I’m not sure what these might look like — although there’s a wealth of insights that I suspect could be drawn from work around agile governance. But I can imagine starting with guidelines on how to think about AI, and when and how to use it smartly.

This is an approach that’s reflected in a recent article by Ethan Mollick on “15 Times to use AI, and 5 Not to.” I found his approach to be helpful as it focuses on good practices that are less likely to change as AI capabilities and uses evolve.

Ethan’s list includes things like using AI for work that requires quantity, work where you’re a subject expert and can assess responses, work that will keep you moving forward, work that is mere ritual, and work where you want a second opinion.

And in the “not to” list, he includes not using AI when you need to learn, when you don’t understand an AI platform’s failure modes, and when the effort’s the point.

Ethan’s list isn’t easy to fold into rigid practices or black and white policies. But it does, I think, point toward a mindset that can begin to overcome the lag between capabilities, utilization, and perceptions around AI.

And this, I suspect, is a useful place for educators to start as they catch up with a rapidly changing AI landscape which, in many cases, has long surpassed their ideas of what AI is, and how students might be using it.

As ever, we will end up writing policy for the (fictional) world model we've constructed in our minds. For slow-moving/static endeavours, our mental models are generally pretty good reflections of reality. For fast-moving technology though, as you point out, this is less so.

I feel an even greater sense of concern as I reject the idea that progress will suddenly plateau (as fashionable, and palatable, as it is); AlphaProof/AlphaGeometry, o1, QwQ-32B, etc. are early exemplars of the power of flexible inference-time compute. This, alone, is a paradigm that still has room to scale. (That said, the ability to ascertain the difference between a machine with a human-equivalent IQ of 250, and 350, for the average human, is less obvious!)

I still find many campuses struggling to come up with a strategic response to AI at all.