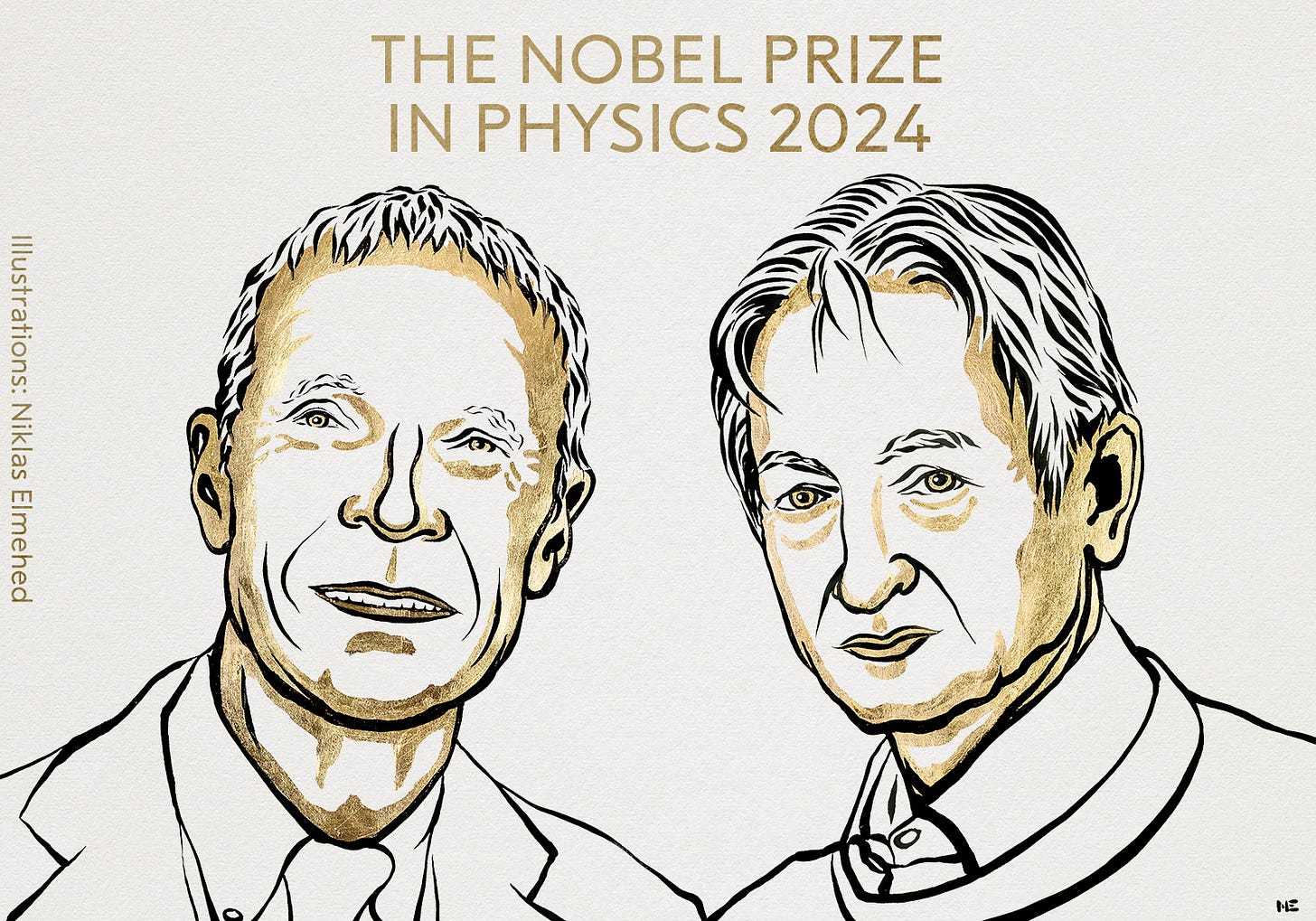

AI captures this year's Nobel Prize for Physics

AI researchers John Hopfield and Geoffrey Hinton have just been awarded the Nobel Prize in Physics for their pioneering work on artificial intelligence

It might seem obvious that, with AI seemingly dominating emerging tech at the moment, someone was going to get a Nobel Prize for their work on artificial intelligence.[1]

But it isn’t — primarily because there isn’t a clear Nobel Prize category to slot computer science and technology innovation into.

Which is why it was so exciting to hear this morning that two pioneering AI researchers, John Hopfield and Geoffrey Hinton, have just been awarded the Nobel Prize in Physics for their groundbreaking work in AI.

The prize was awarded for Hopfield and Hinton’s work on applying their understanding of physical phenomena to the development of neural networks that are now the basis for generative AI and a lot more besides.

It’s worth reading the scientific background paper published by the Nobel Committee for Physics to get a sense of how Hopfield and Hinton’s research and insights led to advances in neural networks and machine learning. It illustrates how inspiration from physics led to computational architectures that mimic biology in an attempt to emulate processes more usually associated with cognitive science (there’s also a more accessible explainer article available).

What the paper shows is that, rather than being simply an impressive feat of engineering or coding, emerging AI systems are the product of transformative leaps grounded in a deep understanding of science, as well as a willingness to extend that understanding across disciplinary boundaries in creative ways.

John Hopfield is a theoretical physicist and a leading expert in the field of biological physics. His work in the 1970s focused on electron transfer between biomolecules and error correction in biochemical reactions. But this was part of a larger interest in collective phenomena in physical systems.

As the background paper explains:

Collective phenomena frequently occur in physical systems, such as domains in magnetic systems and vortices in fluid flow. Hopfield asked whether emergent collective phenomena in large collections of neurons could give rise to “computational” abilities.

This curiosity led to a novel architecture for neural networks that was designed to mimic the pattern-recognition capabilities found in biological systems.
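To make the idea a little more concrete, here is a minimal sketch of a Hopfield-style associative memory in plain Python. It is an illustration rather than a reproduction of Hopfield's original formulation: the function names, the two tiny stored patterns, and the one-neuron-at-a-time update schedule are all my own assumptions. Patterns are stored in the connection weights, and a corrupted pattern is "recognized" by letting the network settle into a lower-energy state.

```python
def train_hopfield(patterns):
    """Build a symmetric weight matrix from +1/-1 patterns (Hebbian rule)."""
    n = len(patterns[0])
    weights = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    weights[i][j] += p[i] * p[j] / n
    return weights


def energy(weights, state):
    """Energy of a state; stored patterns sit at local minima of this function."""
    n = len(state)
    return -0.5 * sum(weights[i][j] * state[i] * state[j]
                      for i in range(n) for j in range(n))


def recall(weights, state, sweeps=5):
    """Update one neuron at a time until the state stops changing."""
    state = list(state)
    for _ in range(sweeps):
        changed = False
        for i in range(len(state)):
            field = sum(weights[i][j] * state[j] for j in range(len(state)))
            new_value = 1 if field >= 0 else -1
            if new_value != state[i]:
                state[i] = new_value
                changed = True
        if not changed:
            break
    return state


# Two illustrative (hypothetical) patterns to memorize.
stored = [
    [1, 1, 1, 1, -1, -1, -1, -1],
    [1, -1, 1, -1, 1, -1, 1, -1],
]
weights = train_hopfield(stored)

noisy = list(stored[0])
noisy[0] = -noisy[0]  # corrupt the first pattern by flipping one bit
restored = recall(weights, noisy)

print("noisy:   ", noisy, "energy:", energy(weights, noisy))
print("restored:", restored, "energy:", energy(weights, restored))
```

The collective behavior is the point here: no single neuron "knows" the pattern, but the network as a whole slides downhill in energy toward the stored memory closest to its starting state.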

In the 1980s Geoffrey Hinton and others extended Hopfield’s work by bringing a more probabilistic or “stochastic” approach to pattern recognition using neural networks.

His “Boltzmann machine”, inspired by statistical mechanics, was a precursor to modern-day deep learning, and allowed neural nets to go beyond pattern recognition to pattern generation within set boundaries.

Again, from the background paper:

The Boltzmann machine is a generative model. Unlike the Hopfield model, it focuses on statistical distributions of patterns rather than individual patterns.
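The difference between recalling one pattern and sampling from a distribution of patterns can be sketched in a few lines of Python. This is a toy under stated assumptions, not Hinton's method: the weights are hand-picked (hypothetical) rather than learned, there are no hidden units, and the names `gibbs_sweep` and `generate` are my own. Each unit switches on with a probability set by its neighbors and a temperature, so repeated sweeps generate patterns roughly in proportion to exp(-energy / temperature).

```python
import math
import random
from collections import Counter


def gibbs_sweep(weights, state, temperature, rng):
    """Resample every unit once, each with a probability set by its local field."""
    n = len(state)
    for i in range(n):
        field = sum(weights[i][j] * state[j] for j in range(n) if j != i)
        # Probability that unit i takes the value +1 given all the other units.
        p_on = 1.0 / (1.0 + math.exp(-2.0 * field / temperature))
        state[i] = 1 if rng.random() < p_on else -1
    return state


def generate(weights, n_samples, temperature=1.0, seed=0):
    """Draw patterns by repeated sweeps (weights are fixed here, not learned)."""
    rng = random.Random(seed)
    n = len(weights)
    state = [rng.choice([-1, 1]) for _ in range(n)]
    samples = []
    for _ in range(n_samples):
        gibbs_sweep(weights, state, temperature, rng)
        samples.append(tuple(state))
    return samples


# Hypothetical hand-picked weights: units 0 and 1 prefer to agree,
# while unit 2 prefers to disagree with both of them.
weights = [
    [0.0, 1.0, -1.0],
    [1.0, 0.0, -1.0],
    [-1.0, -1.0, 0.0],
]
samples = generate(weights, n_samples=2000)
counts = Counter(samples)

for pattern, count in counts.most_common():
    print(pattern, count)
```

With these weights, the two lowest-energy patterns, (1, 1, -1) and (-1, -1, 1), should dominate the samples, while the other six still turn up occasionally. That occasional variety is what separates a generative model from a pure memory.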

At the time, the computing power available limited the application of Hopfield and Hinton’s work. But massive advances in compute power over the past decade, along with associated advances in deep learning, have built on these foundations to create the basis for today’s generative AI systems.

It remains to be seen how much can be built on these foundations before the next set of step-change breakthroughs is needed to take AI to the next level. I worry that simply building on these foundations without creating new ones won’t get us too much further, but I may be wrong there.

This, though, raises the question of whether we’re at a point where AI is capable of generating similar leaps of scientific intuition (especially when they transcend disciplinary boundaries) — and the possibility of AI reaching the point where it can create the foundations of the next generation of “thinking” machines.

The evidence so far points to AI being capable of revealing associations and patterns that are non-intuitive, but not capable of understanding the significance of these.

As engines that generate the precursors to scientific intuition, the raw material that allows humans to make breakthroughs, AI systems could be profoundly transformative, although I do have to wonder what will happen when the Nobel committees have to grapple with breakthroughs that were aided and abetted by AI.

But without awareness and consciousness, AI will still be a generator of ideas, not an understander and implementer of ideas.

But this brings us back to the work of Hopfield and Hinton — and Geoffrey Hinton in particular — and the nature of scientific intuition.

What is scientific intuition, if not a non-linear way of using pattern recognition to solve complicated problems through sophisticated solution minimization processes? (That is, making non-obvious connections and identifying patterns that allow the identification of optimal solutions to problems in a multidimensional space, although this sounds just as convoluted as the original!)

And if this is what it is, is the idea of machine-based scientific intuition so much of a stretch?

This is likely part of the reasoning that led Geoffrey Hinton to question the safety of emerging AI systems in 2023, and that has led to him being increasingly vocal about the possible dangers of advanced AI.

Of course, AI awareness is still something of a metaphorical wrench in the machine here, although just as it can be argued that AI could develop a sense of scientific intuition, it can be argued that there’s no fundamental reason why awareness couldn’t also emerge within a sufficiently complex artificial system.

And this is where this Nobel Prize becomes particularly interesting. It was leaps of scientific intuition which transcended formal scientific disciplines that led to the breakthroughs behind today’s announcement. And this raises the question: are we investing enough in the exploratory and serendipitous science around AI, neuroscience, consciousness, discovery, creativity, and a whole lot of other areas, to stimulate similar non-linear breakthroughs? Or are we so obsessed with the mechanics of technology innovation these days that we’re unlikely to spot the less obvious patterns that lead to transformative breakthroughs?[2]

Or (and this is where things get really interesting) have we created the foundations of a technology that will end up achieving this despite us, beating us at our own intelligence game and exceeding our ability for serendipitous discovery?

And if we have, where will this lead us?

[1] The day after this was published, the Nobel Prize in Chemistry was awarded to David Baker, Demis Hassabis and John M. Jumper for their work on protein structures and design. Hassabis and Jumper work with Google DeepMind and were joint awardees for their work on AI and protein structure prediction, while Baker’s work has drawn on DeepMind’s AI platform AlphaFold.

[2] I don’t think this is the case. For instance, the Canadian Institute for Advanced Research (CIFAR) Learning in Machines and Brains program has been highly influential in the development of deep learning architectures and in pushing the capabilities of AI, and it’s a program that Geoffrey Hinton, as well as AI luminaries like Yann LeCun and Yoshua Bengio, have long associations with. But there is a danger that we focus so much on near-term advances in AI that we fail to see the intellectual heavy lifting that’s needed to fuel future advances.