Four more ways of thinking about advanced technology transitions

A simple threat/opportunity framework for thinking about approaches to navigating advanced technology transitions

Last week I introduced four simple ways of thinking about advanced technology transitions, using the example of “Pippard’s Ladder.” This week I want to talk about a second, complementary framework for conceptualizing approaches to navigating advanced technology transitions.

It’s a framework that I’ve been playing with for some time now, and was part of the same keynote I gave at the IEEE International Symposium on Consumer Technology where I talked about Pippard’s Ladder.

As in the previous article, the framework is quadrant-based. But this time it’s focused on identifying pathways to realizing opportunities and avoiding threats as society is transformed by emerging technologies.

The starting point takes inspiration from our work over the years on Risk Innovation. This is an approach that conceptualizes risk as a threat to value, where the value bucket can encompass a multitude of dimensions. For instance, the value at risk might be human or environmental health. But it could just as easily be identity, dignity, or deeply held beliefs.

Here, “value” should not be confused with “values” — while “values” represent what is considered to be good or bad, “value” represents the worth of something to someone, whether that is measured in time, money, health, security, dignity, access to resources, or a whole range of other ways.

The point is, “value” represents something that can be lost or gained in a risk innovation approach to making decisions.

This emphasis on what is of value or worth to an individual, community, or society makes Risk Innovation an extremely flexible tool for conceptually navigating complex risks and benefits. In particular, it allows risk to be approached as a balance between maintaining existing value and enabling the creation of future value.

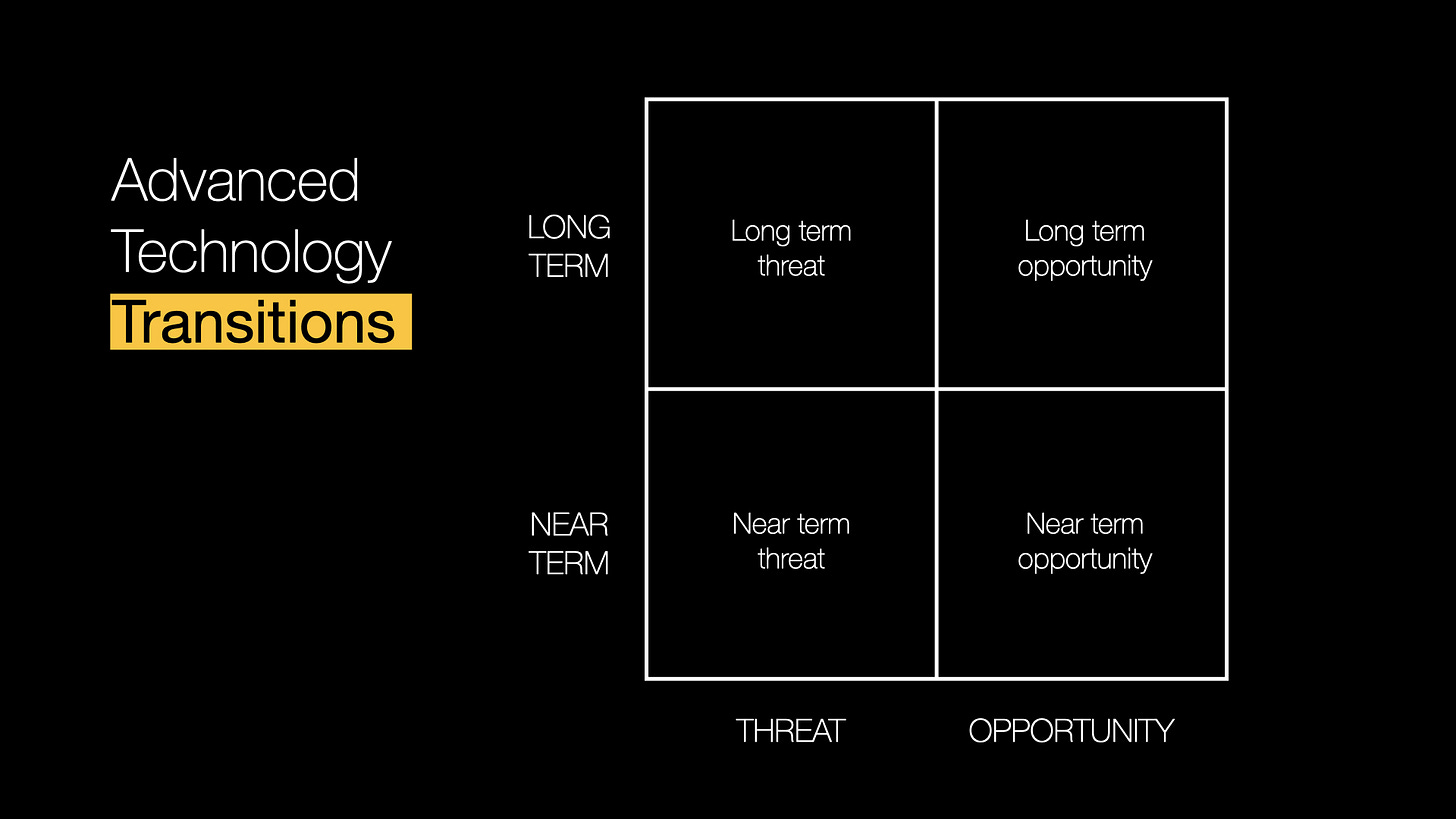

Some time ago, I began to play with how this might translate to a very simple quadrant framework for thinking about navigating advanced technology transitions. The result was a framework that considers near and far term threats and opportunities associated with technology transitions:

This is a blindingly simple framework — it’s definitely not rocket science! But it is one that offers surprisingly sophisticated insights when applied to specific cases.

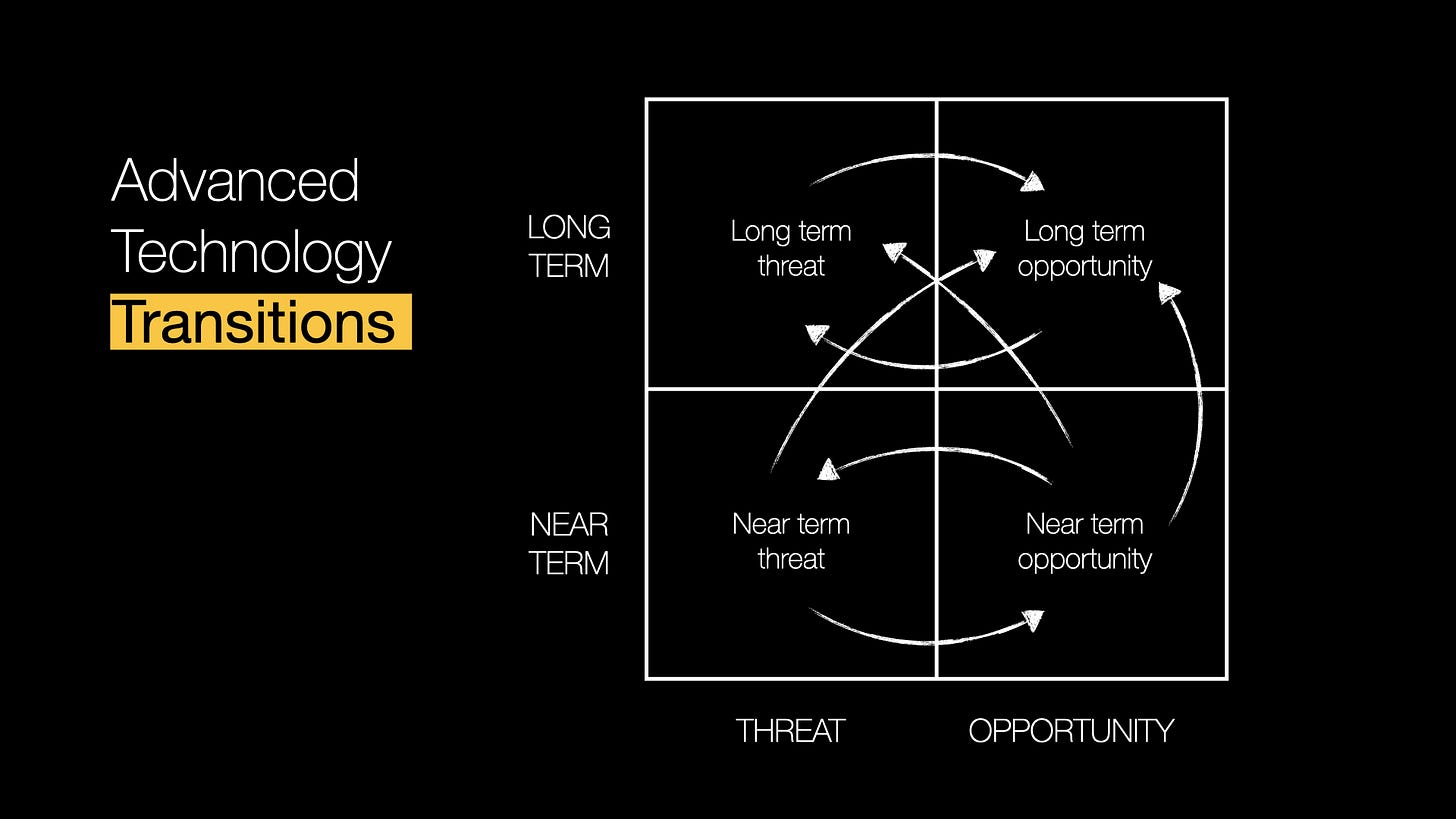

This becomes apparent when you begin to ask how to reduce the chances of actions that lead to a loss of value, and how to increase the chances of actions that create value:

To illustrate this, the following are three hypothetical examples that draw from the potential impacts of generative AI on learning, the use of AI in discovery, and the impacts of AI and other data-based technologies on social cohesion.

Each is illustrative only, and is designed to show how the framework might be applied. And while all directly address AI, they are simply examples drawing on one advanced technology out of many that could be addressed using this approach.

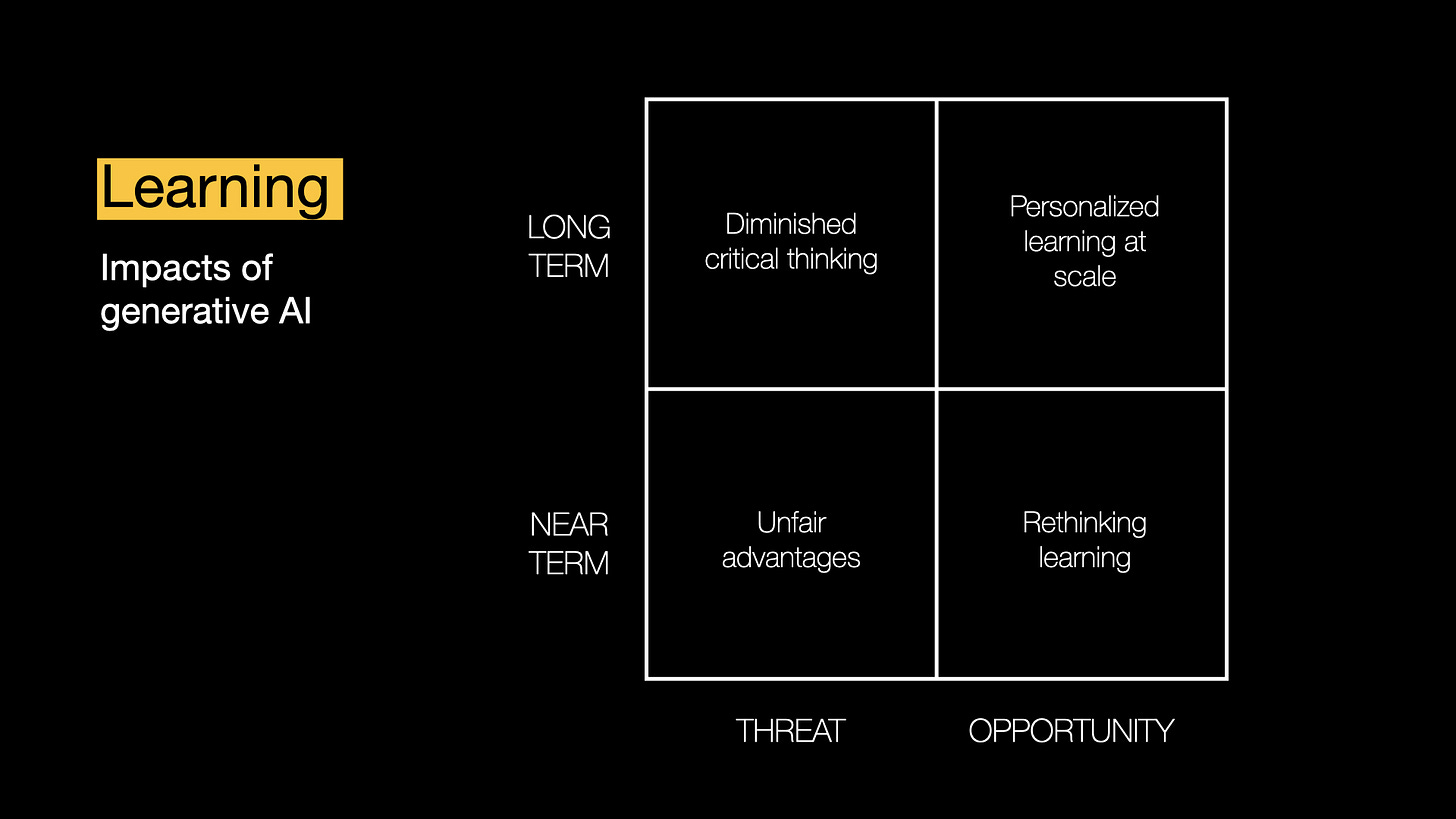

Generative AI and Learning

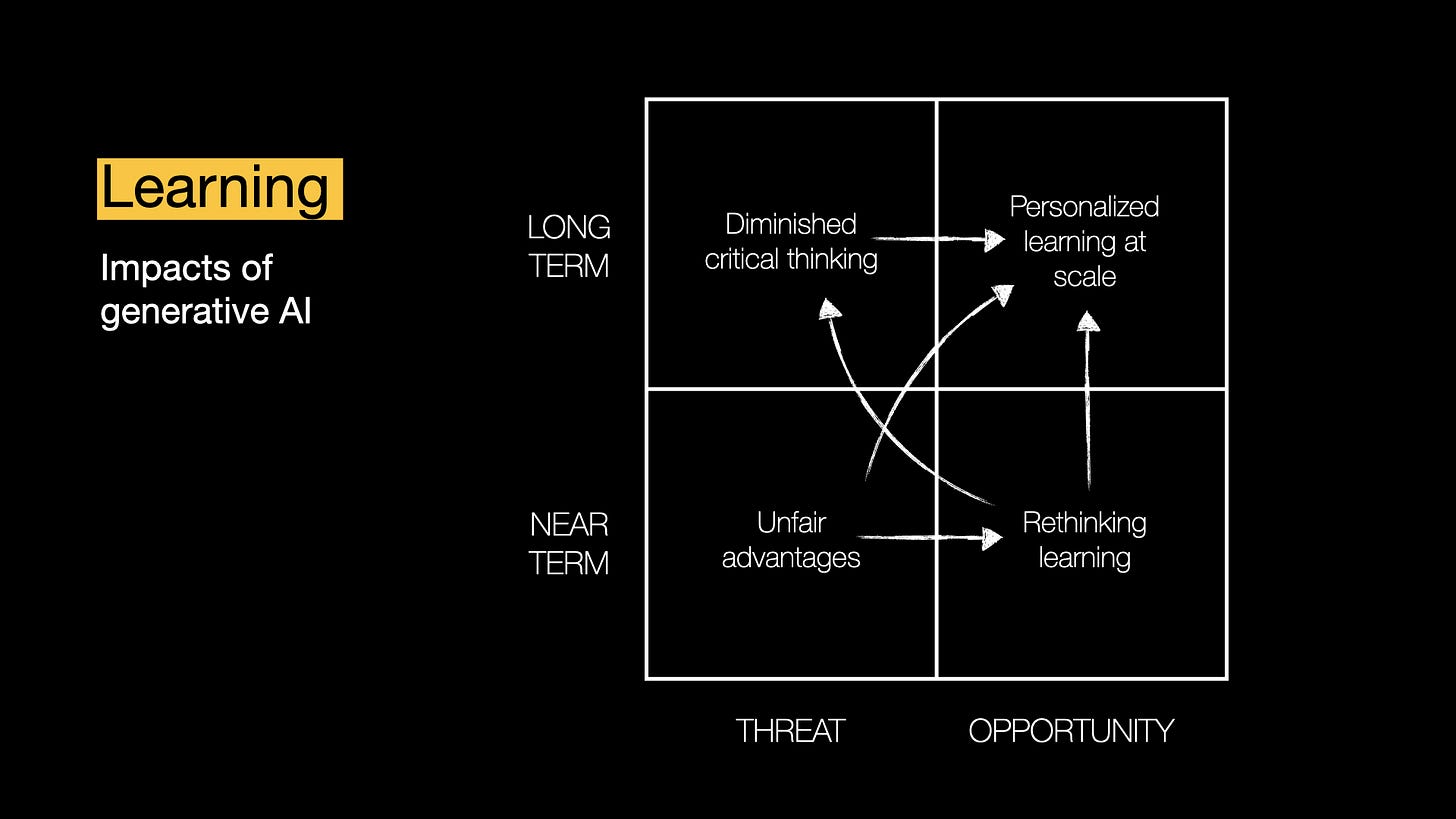

Applying the framework to generative AI and learning, one possible set of near and far term threats and opportunities includes unfair advantages (near term threat), rethinking approaches to learning (near term opportunity), a reduction in the ability of students to think critically (long term threat), and the possibility of personalized learning at scale (long term opportunity):

Faced with these scenarios, it’s intuitive to ask how organizations and communities might navigate from threat quadrants to opportunity quadrants, while reducing the chances of moving the other way.

For instance, mechanisms that move society from unfair advantages to rethinking learning in the near term, or that bring about personalized learning at scale in the long term, would seem to be worth pursuing. Similarly, mechanisms that prevent slippage from personalized learning at scale to diminished critical thinking would also seem to be worth exploring:

Once pathways of interest like these have been identified, it becomes possible to explore mechanisms that either open up or shut down these pathways. These in turn form a starting point for exploring strategies for navigating very specific advanced technology transitions.

In this example, a handful of mechanisms can be identified and attached to specific pathways (shown below). These include the development and application of checks and balances, the use of responsible innovation and principled innovation, changes in how people think about and conceptualize learning in an AI-dominated world, and adopting human-centric innovation:

These mechanisms are far from exhaustive. But they do illustrate how, even within a very simple model, it becomes possible to move toward identifying and operationalizing approaches to navigating threats and opportunities within a specific technology transition.

AI and Discovery

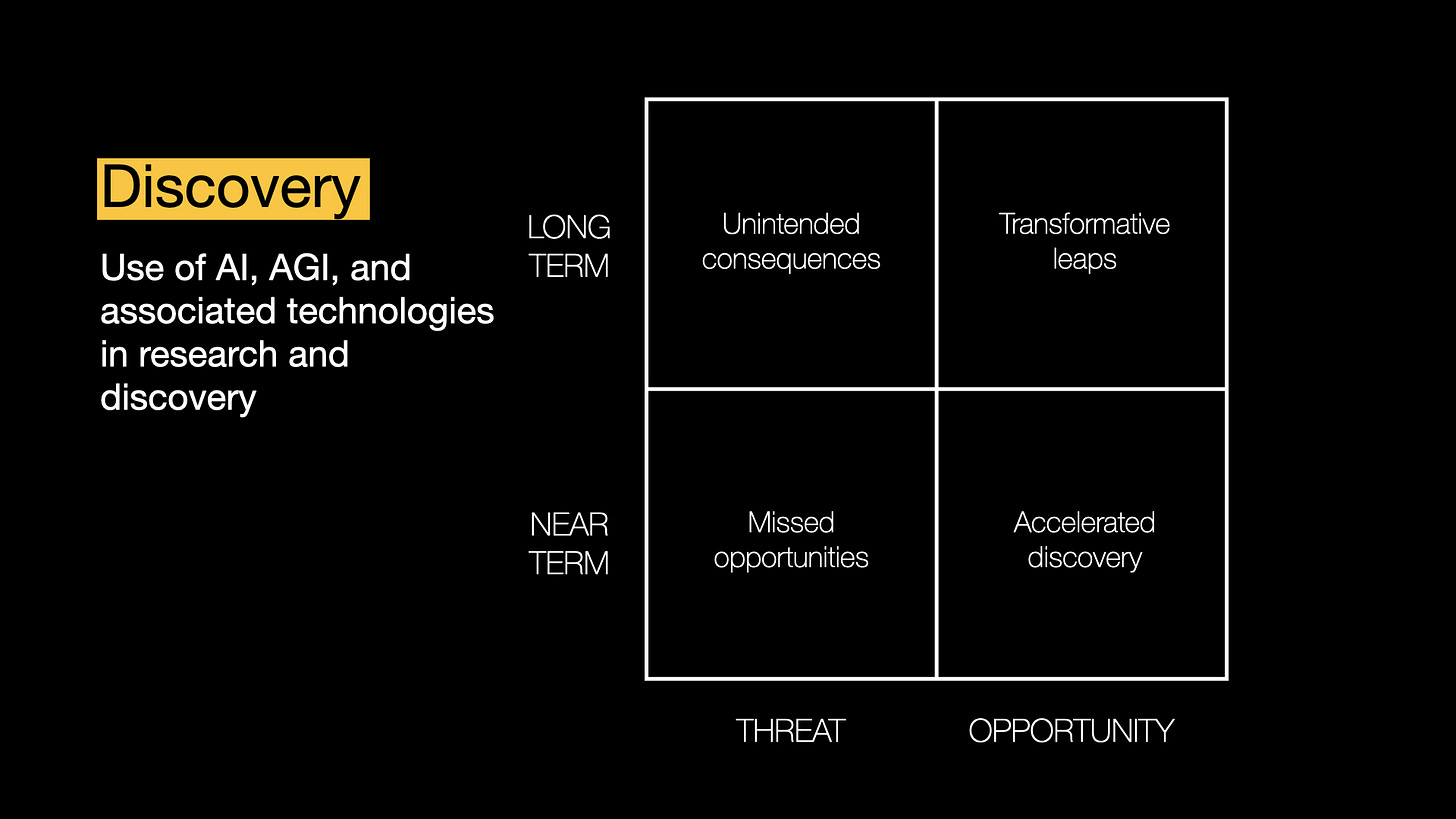

The second example here considers the use of artificial intelligence in the process of research and discovery. As reports like the recent one from the World Economic Forum on emerging technologies have highlighted, AI is already accelerating the rate of research and discovery. But there are also potential threats to be avoided here.

Applying the quadrant framework here, we might consider missed opportunities as a near term threat (such as loss of future value through not adopting new technologies), accelerated rates of discovery as a near term opportunity, complex and hard to predict unintended consequences as a long term threat, and transformative leaps in what is known and what we can achieve as a long term opportunity:

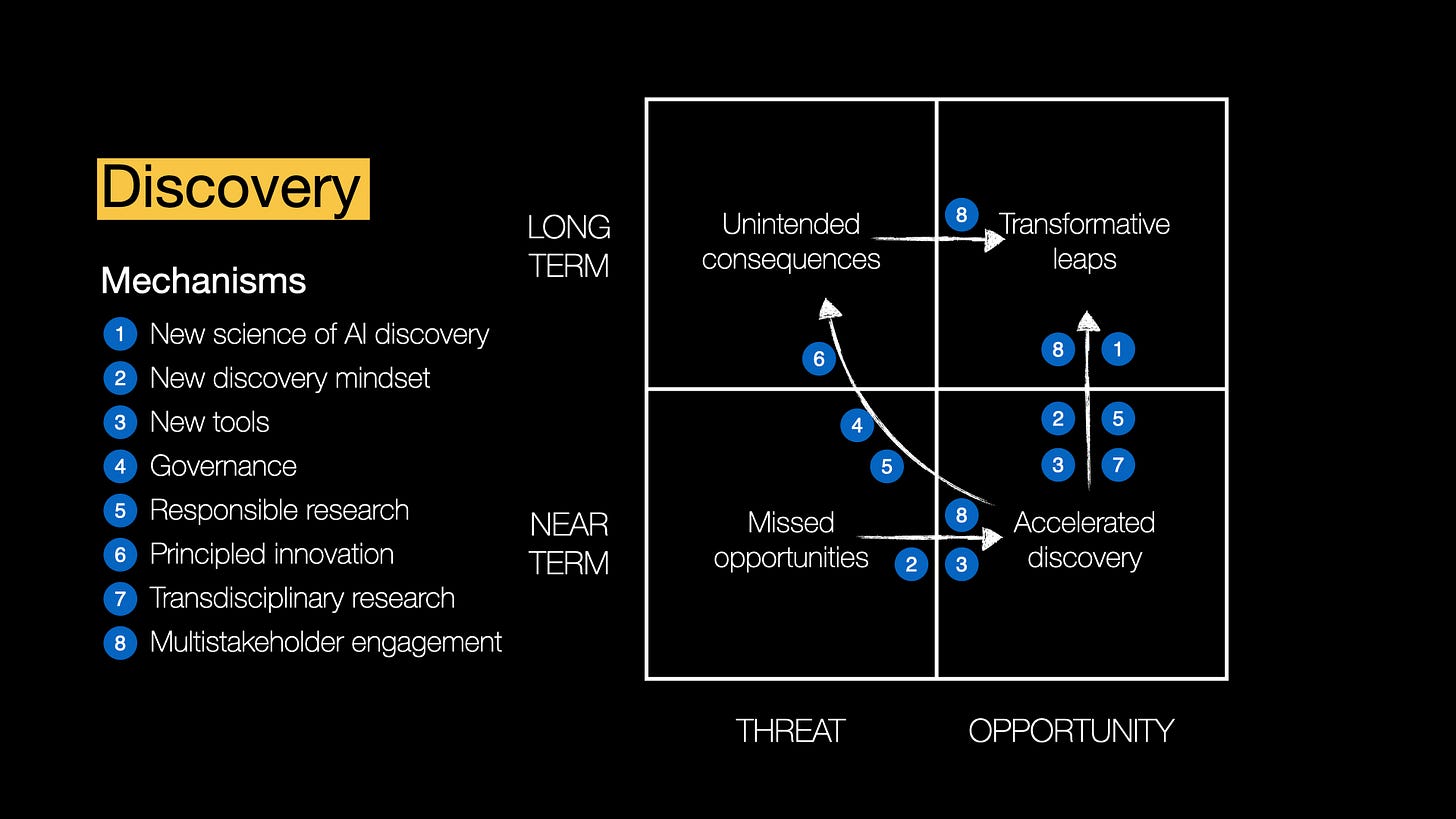

As with the example of generative AI and learning, there are both desired and undesired pathways that connect these quadrants, and mechanisms that potentially allow these pathways to be successfully navigated:

Again, this is a limited set of mechanisms. But it illustrates the point. Some of these echo mechanisms identified for the learning scenario above. But others are different — the need for effective governance mechanisms for instance, as well as new ways of thinking about research, and an emphasis on research that transcends conventional disciplinary boundaries.

Multistakeholder engagement emerges as a mechanism in this framework that is associated with each of the four identified pathways. This reflects the reality that there are both risks to pushing forward with transformative research without engaging with people who might be impacted, and advantages to working closely with communities who stand to gain from societally responsive research and discovery.

Both of these are seen repeatedly in the trajectories that emerging science and technology take. For instance, progress around gene-based research and technology has been impeded in the past by a lack of effective multistakeholder engagement, while research into disease treatment — cancer especially — has benefitted from such engagement.

As new technologies become more powerful and impactful across society, multistakeholder engagement is likely to become increasingly important as a mechanism for successfully steering progress toward the right-hand quadrants. But it is just one of many mechanisms that will help ensure positive transitions.

AI and Social Cohesion

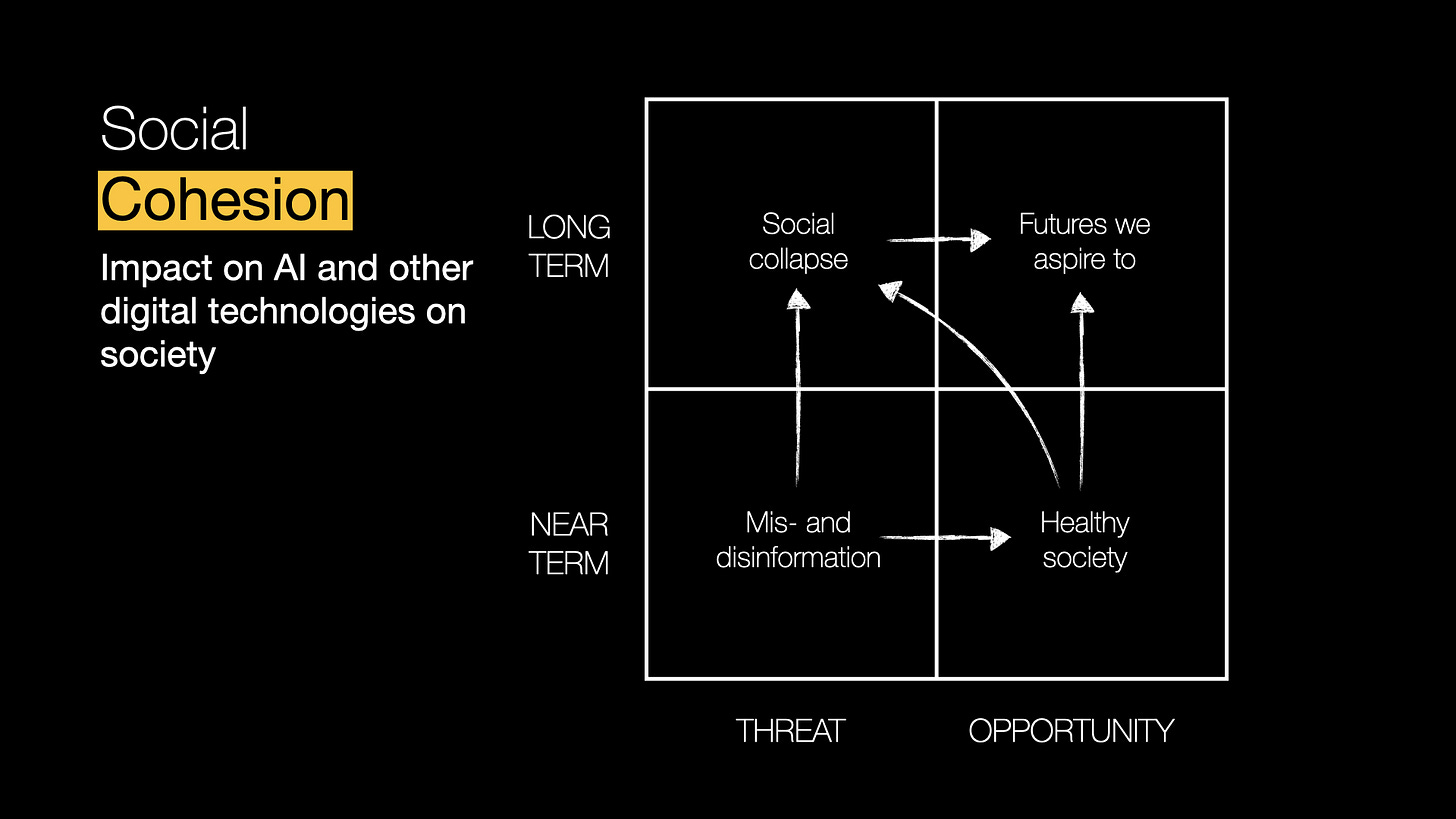

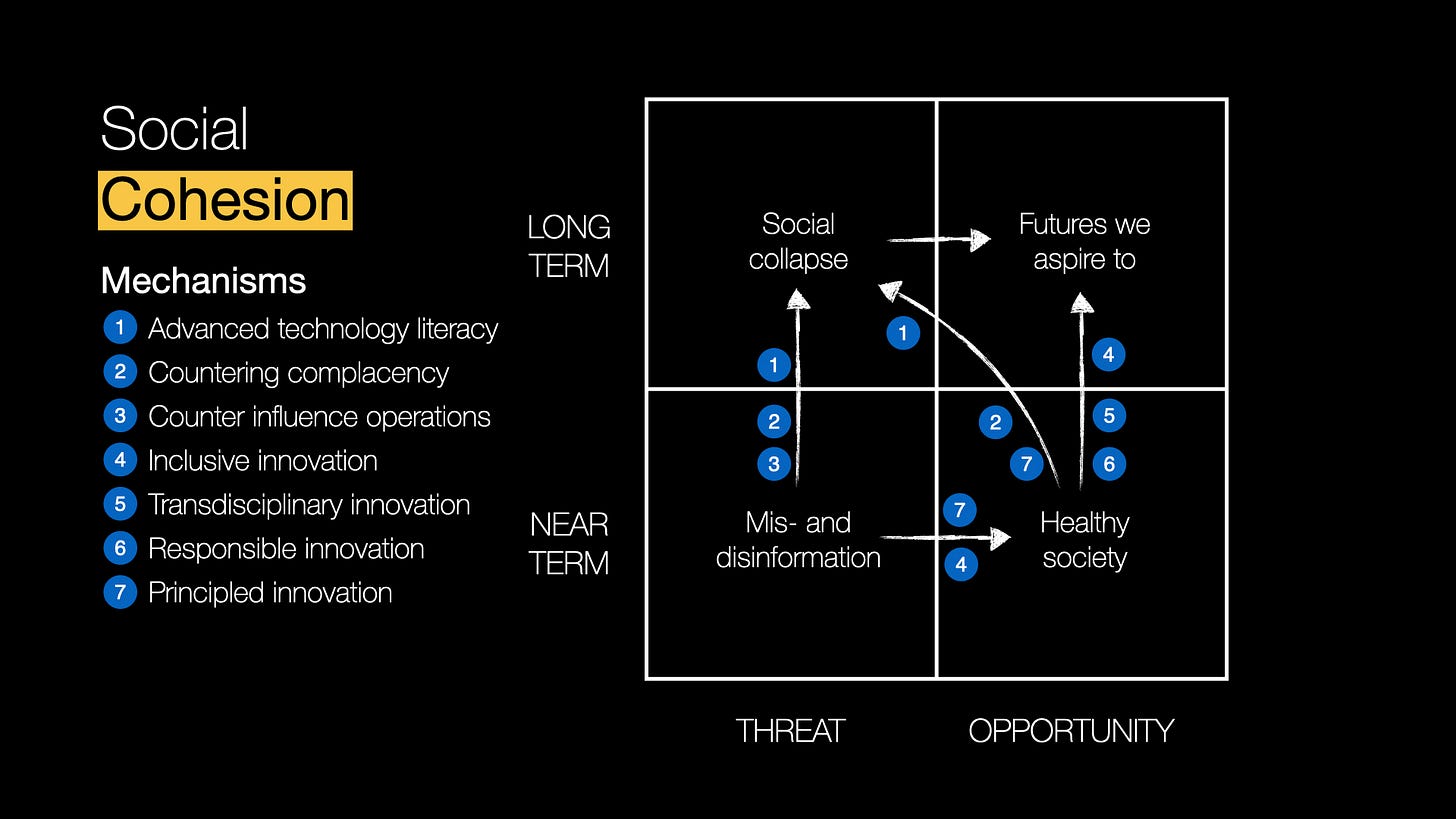

The final example here considers the highly topical issue of social cohesion in a world where AI and other digital technologies have an increasingly outsized impact on social dynamics and decision making.

As with the two previous examples, the threats and opportunities here represent a subset of a much larger list. But they are all issues that have been discussed in the context of social media, AI, and other digital technologies.

And so we have misinformation and disinformation as a near term threat, a healthy society as a near term opportunity (with digital technologies enhancing individual and community decision making and health), social collapse as a somewhat dystopian long term threat, and positive AI-augmented futures as a long term opportunity.

As before, identifying these threats and opportunities allows pathways to be identified that link them, and mechanisms that potentially help navigate these pathways:

Once again there is some commonality between these mechanisms and those in the examples above, including the use of responsible innovation, principled innovation, and taking a transdisciplinary approach to navigating threats and opportunities.

But there are also some mechanisms that are unique to this particular context. These include increasing literacy around advanced technologies that leads to informed decision making, countering complacency where people unthinkingly go with the flow of a new technology until it’s too late (or simply allow technology entrepreneurs to make critical decisions), and actively developing counter-influence operations where AI and other technologies can lead to the propagation of harmful ideas, understanding, and perceptions.

Not Quite a Tool Yet, But …

The framework above is still a work in progress. It’s one that I think provides insights into navigating advanced technology transitions that are otherwise hard to grasp. And it lays a foundation for moving from rather nebulous threats and opportunities to operationalizing mechanisms to avoid the former while embracing the latter.

It needs more work and testing. But I would be interested to see how useful it is when applied to very specific cases of transformative new technologies being developed and introduced, and how it might be further developed into a tool that informs decision making around advanced technology transitions that aren’t navigable through conventional understanding and means.
