Advanced AI is creating challenges for Native American communities – but also opportunities

Three experts explore the complex intersection of artificial intelligence and U.S. Indigenous communities

A couple of months or so ago, my colleague Sean Dudley mentioned that he’d been working with the AI image-generation platform Midjourney to generate images of Native American towns, and was shocked by what he discovered.

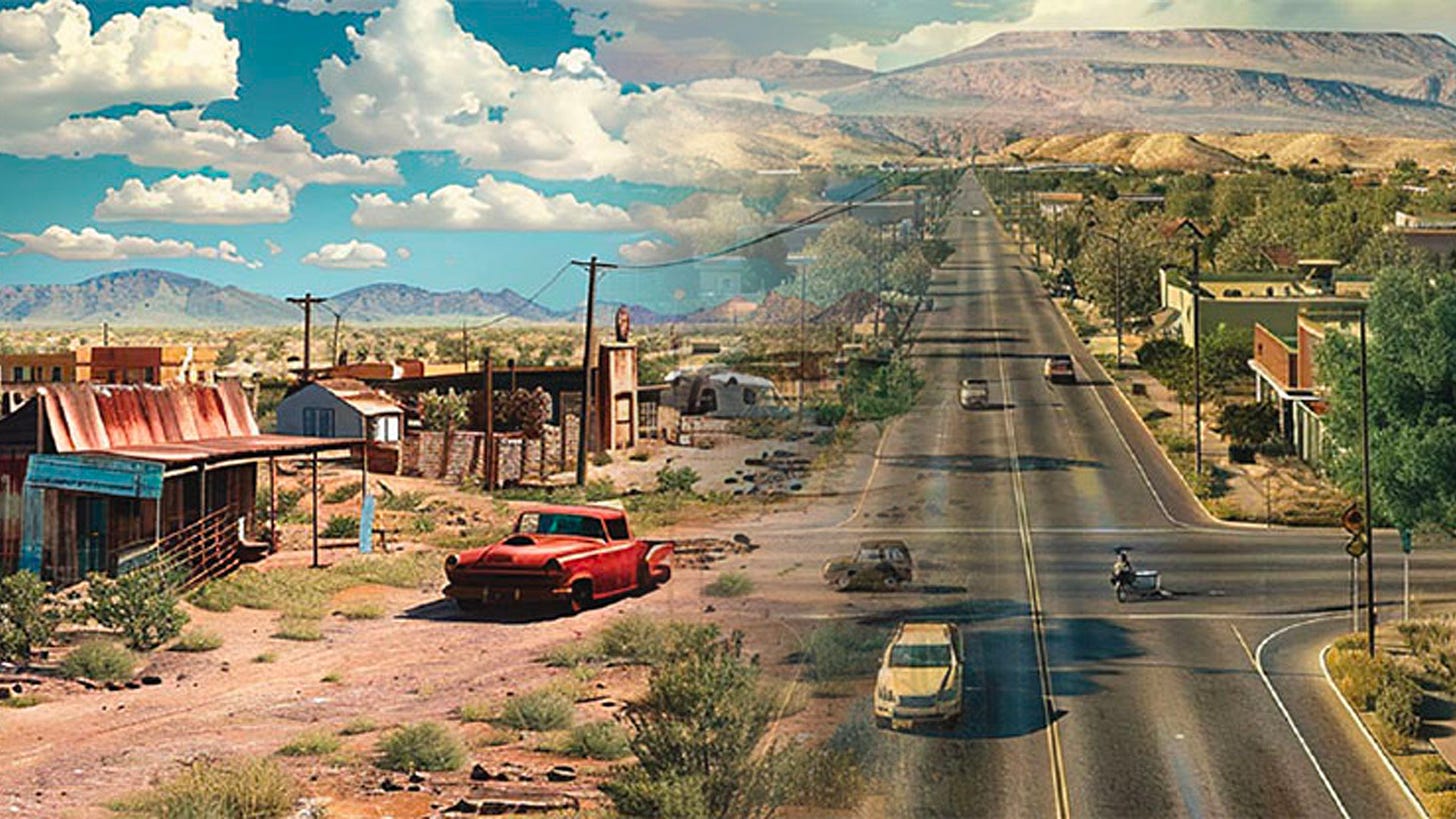

While renderings of a typical American Southwest desert town looked reasonably modern, the same prompt applied to Tuba City, the largest community in the Navajo Nation, produced something that looked more like a run-down shantytown (and nothing like the real Tuba City).

The contrast — shown in the image above — is a stark reminder of the biases that still plague generative AI platforms and disadvantage minority groups and communities. And yet, as Sean and his co-author Al Kuslikis explore in a recent article in the journal Tribal College (the journal of American Indian higher education), the news isn’t all bad.

Dudley and Kuslikis’ essay “Opportunity and Risk: Artificial Intelligence and Indian Country” is a must-read for anyone concerned with how the very real bias and misrepresentation in AI platforms impact minority communities. But it’s also a call to action to ensure that the equally real potential of AI is fully realized in these communities.

To explore these risks and opportunities further, I asked Sean and Al to reflect on the article and their own perspectives on AI and Native American communities. I also asked my good colleague and former student Leonard Bruce to provide some insights from his own perspective as a Native American and member of the Gila River Indian Community.

Together, the reflections below provide a unique insight into the need to be more inclusive and imaginative if we’re to ensure emerging AI positively impacts all lives without disadvantaging — inadvertently or intentionally — those in minority groups and communities.

Al Kuslikis

Al Kuslikis recently retired from the American Indian Higher Education Consortium. Much of his focus at AIHEC was on Tribal College and University capacity building in STEM education and research, emphasizing collaborations that prioritize the colleges’ Tribal nation-building goals.

The opportunities and risks associated with AI have been, and must continue to be, explored and discussed as AI systems pervade more and more of the world we experience. A particularly important question, to my mind, is the balance between what I’ll call disruptive and regenerative aspects of AI.

We are seeing how disruptive AI, especially coupled with other technologies like robotics, is transforming how we learn, how we interact with each other, how we engage the job market, how we navigate transactions of all kinds that are managed and protected. And we see the power of AI-driven social media to influence public discourse, attitude formation and information sharing on a global scale.

Disruptive technologies drive social change/evolution in ways that can create discontinuities between previous and new practices, challenging us individually and collectively to respond in ways that are adaptive and that minimize destabilizing stresses to whichever system is involved.

Regenerative AI, to borrow a term from ecology, facilitates homeostasis with respect to the relationships that allow the system to endure. Rather than creating (or capitalizing on) vulnerabilities within the system, it reinforces (and possibly optimizes) relationships, interactions, connections, and ways of knowing that support growth and stability of an existing system – or support transitions that continue a certain historical trajectory. It might be more useful to consider that the disruptive or regenerative characteristics of an AI system can be a matter of perspective. AI-generated decision-making support, for example, is disruptive in cases where local groups or communities are able to identify and implement solutions without depending on external expertise (or authorization), calling into question the need for some hierarchical administrative structure that can be a major impediment to agile response. It would be regenerative in that it restores agency to the local group or community responding to a challenge.

I think of Indigenous AI as a form of Regenerative AI that must, by definition, be locally driven. Tribal communities are challenged to develop their own roadmap for designing and deploying AI systems that facilitate a local, culturally framed knowledge system that is, at the same time, a subsystem of the global knowledge ecosystem.

AI tools can be designed to support processes that preserve local agency (sovereignty) with respect to preservation of social/cultural practices and understandings, ethical norms, and factors associated with social identity management while facilitating local data-informed problem solving and decision making. It’s amazing to think that a combination of AI and other new and emerging technologies can be employed in service of such goals. But this is only possible if the work is driven by the community.

The nation’s Tribal Colleges and Universities (TCUs) are perfectly positioned to engage Tribal communities in this work. TCU undergraduates from their Tribal communities are the mother lode in this scenario. They can acquire AI development skills, engage community members (including parents, grandparents, and elders) in participatory design projects, and coordinate with partners within the AI research community. ASU, Navajo Technical University, Diné College and Salish Kootenai College are developing an AI capacity-building model in partnership with the National Research Platform that would facilitate local AI while taking advantage of resources and fostering collaborations across the AI research community. It may also provide a model for Indigenous communities worldwide.

Sean Dudley

Sean Dudley serves as the chief research information officer and associate vice president for research technology at Arizona State University.

I have evaluated AI on many occasions over the years, both as enterprise lead for research technology at ASU and as a fan of science and technology stretching back as far as I can remember. My experience with AI had been routine in the past: immerse myself in the available technologies and evaluate them critically before moving on. Yet, while developing our recent article for the Tribal College Journal, I found myself embarking on a series of additional exercises that changed the way I view these technologies and the opportunities they represent.

To set the stage, my coauthor Al Kuslikis and I decided our goal was to produce an article that readers would find grounded yet hopeful. I feel we achieved this, and I am grateful for his partnership and that of our other collaborators as we worked our way toward this end.

Further in our development work, we began creating demonstrations with the goal of revealing the inner workings of contemporary AI through an Indigenous lens. I thank my co-author and Indigenous faculty colleagues across ASU and elsewhere for the support and insight that allowed us to design these. As I applied our demonstrations across spoken, text-based, and graphic design AI platforms, further insights were revealed.

During this journey, AI platforms that generate imagery were some of the most telling. The task seemed simple at first: prompt AI to generate images of a contemporary Indigenous community. When I proceeded, however, I was presented with imagery of deeply rural areas that lacked plumbing, electricity, or paved roads.

In the article (and in the header image above) you will see where this journey led, with its series of further twists and turns.

I also prompted AI to render depictions of artificial intelligence benefitting Indigenous communities. The results were artistic, yet without example. Despite hours of effort to engineer a prompt that would reveal something more profound, nothing changed.

It was then that it occurred to me that the AI, unable to produce any examples, was indicating something hopeful — that the stories of Indigenous AI are primarily yet to be written.

Leonard Bruce

Leonard Bruce is an Indigenous storyteller, community technologist, and member of the Gila River Indian Community, weaving history, culture, and innovation to empower his people. From digitizing archives to exploring AI's potential, his work bridges tradition and technology to share stories and shape the future.

As both an Indigenous man and an AI policy nerd, reading the Tribal College Journal article on AI and Indian Country got me excited about how this technology could be leveraged in our communities. I’m grateful the article doesn’t shy away from exploring the pitfalls of AI while also leaving space for how our communities can actively shape its implementation for our people. It’s a powerful reminder that, while AI carries risks, it also holds opportunities — if we take the lead in its development and application.

One thing that stood out to me was the idea of making AI systems transparent and explainable. The article highlights the importance of models developed with principles from Indigenous data experts, but I wonder: how does that actually work in practice? Transparency sounds great in theory, but auditing and understanding these systems — especially at the community level — is a huge challenge. It’s not enough for AI systems to provide explanations; our communities need the tools and training to interpret those explanations, ask critical questions, and evaluate whether a system aligns with our values.

I loved the discussion of “Indigenous AI” principles, like the Indigenous Protocol and Artificial Intelligence (IPAI) design principles and the American Indian Higher Education Consortium’s (AIHEC) evaluation framework. These frameworks are amazing ideals, grounded in Indigenous values. But they also raise critical questions: How do we turn them into action? How do we ensure our communities can implement and sustain these practices over time? More importantly, how do we ensure they are not just symbolic guidelines but living, actionable principles that shape the technologies affecting us?

Big tech companies don’t have the best track record when it comes to honoring diverse principles. I worry that in a world dominated by tech oligarchs, they won’t take Indigenous AI principles seriously. That’s why it’s essential for us not only to have a seat at the table but also to build capacity in our own communities. We need to understand how AI is made, how bias creeps in, and how we can design systems that reflect our values. This knowledge is critical not only when we purchase or implement AI systems but also when these systems are deployed against us—whether in education, policing, or healthcare.

I believe the answer lies in going local. Tribal colleges and Indigenous communities can lead the way by building innovative partnerships and fostering homegrown expertise. For example, creating tribal AI labs or innovation hubs could provide spaces to train future AI leaders, conduct research, and design culturally grounded tools. AI could help preserve Indigenous languages, support tailored education programs, and address bias in AI systems. Tackling bias is particularly critical — tribally led initiatives can ensure systems are trained on data that reflects our values and avoids harmful stereotypes or exclusions.

Finally, the article left me thinking about the stories we tell about AI. Too often, we hear dystopian narratives — robots taking jobs or AI systems running amok. But where are the stories that imagine AI strengthening our sovereignty, culture, and traditions?

We need to dream up futures where technology nourishes and empowers our people. These stories can inspire action and shift the focus from fear to possibility. AI is here, and it’s moving fast. We can’t just react to it—we need to prepare and lead the way.

You can read the original article at: Sean Dudley and Al Kuslikis (2024). Opportunity and Risk: Artificial Intelligence and Indian Country. Tribal College: Journal of American Indian Higher Education, Volume 36, No. 2 - Winter 2024.

Please consider donating to support the Tribal College Journal.

Thanks for this thoughtful piece. An example of the language preservation use case here in Canada: https://www.businesswire.com/news/home/20240626907117/en/Camb.ai-and-Seeing-Red-Media-Forge-Groundbreaking-Partnership-to-Preserve-Indigenous-Languages